Nov 29 2023

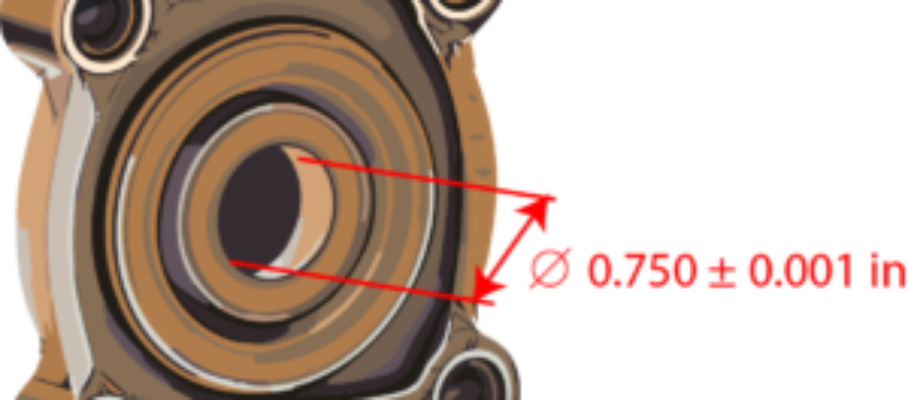

Tolerances

Online forums rarely discuss quality fundamentals like characteristics and tolerances. You find arguments about process capability, but the requirements against which process capability is measured are taken as given. Tolerances on characteristics are objectives that production is expected to meet, and they deserve an exploration of what they mean and how they are set.

Dec 30 2023

Greatest Hits of 2023

This blog’s greatest hits of 2023:

#greatesthitsof2023, #quality, #VSM, #ValueStreamMap, #deming, #toyota


By Michel Baudin • Uncategorized • Tags: Deming, Greatest hits of 2023, Quality, Toyota, Value Stream Map, VSM