Nov 29 2023

Tolerances

Online forums do not often discuss quality fundamentals like characteristics and tolerances. You find arguments about process capability, but the requirements for process capability are taken as given. Tolerances on characteristics are objectives that production is expected to meet, and deserve an exploration of what they mean and how they are set.

Contents

- From Single Values to Tolerances

- Multivariate Tolerances

- Tolerance Stacking

- Tolerance Gaming

- Allowances and Clearances

Quality Characteristics

The literature distinguishes two kinds of quality characteristics:

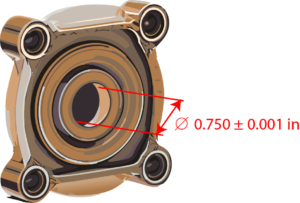

- Measured variables, like the diameter of a hole. Required values are specified in the product design. A variable can be measured on a completed unit without destroying it, and the unit is defective if the measured value does not match the spec.

- Attributes, which are binary, or go/no-go. A gasket is or isn’t in place, a painted surface is or isn’t free of scratches, a casting leaks or doesn’t…

The bulk of the literature is about individual characteristics. Real products often have many characteristics, which cannot be assumed to be independent. You assemble products from components with their own characteristics, and also need to consider how they stack up into characteristics for the whole.

Measured Variables Versus Attributes

A measurement is richer information than an attribute, which is useful when analyzing the process. In daily operations, however, the pass/fail decision on a unit of product at any stage is binary. If it’s based on a measurement, then you are reducing the measurement to an attribute, which is what you do with tolerances.

Originally, the measured variables were purely geometric and called critical dimensions. Even today, much of the literature is about Geometric Dimensioning and Tolerancing (GD&T). Measured variables, however, are anything you can measure on a workpiece without destroying it, like electrical resistivity, pH, concentration of a particular chemical, operating pressure, temperature, etc.

Post-Process Observation

You observe characteristics on finished goods or on WIP between operations inside the process. The primary reason is that the characteristics serve as indicators of the product’s fitness for use, or of the fitness of the workpiece for downstream operations.

When you use this data to control the upstream process, it raises the question of why not do it based on observations during the execution of the process rather than once it is complete. As generally understood, this would be process control, as opposed to Statistical Process Control (SPC).

When Shewhart created SPC 100 years ago, automatic feedback control was in its infancy. You couldn’t capture most process parameters while machines were working. All you could do then was observe and measure workpieces once the process was completed, and that’s what SPC was based on. At that time, humans controlled machine tools by turning cranks and pulling levers. Today, production machines’ embedded and supervisory controllers can sense the status and respond while in process.

It doesn’t mean that dynamically tweaking machine settings to produce a consistent output is always the right solution or that it is easy. It only means that many things that were not technically feasible 100 years ago are today.

Automatically adjusting cuts based on tool wear is a simple example. Running a real-time simulation of the process and adjusting the actual process to sync the simulated and the actual processes, is more challenging, but has been done in the additive manufacturing of metal parts.

Origin in Interchangeable Parts

Among the key contributors to quality in the 20th century, Shewhart was the only one to show an interest in tracing efforts at improving quality to origins in interchangeable parts technology. Writing in 1939, Walter Shewhart acknowledged interchangeable parts technology as a milestone in manufacturing quality, tracing the use of measured variables to 1787, without going into the specifics of who did what. Figures 1 and 2 in Statistical Method from the Viewpoint of Quality Control are quite explicit:

Shewhart’s timeline is a bit hazy, as he acknowledges with question marks. He did not have access in the 1930s to the same detailed documentation we have today, through the books of Ken Alder for the early work in France, and David Hounshell for its continuation in the US. Furthermore, documents from the original developers of the technology, like Honoré Blanc’s memorandum from 1790, are now one click away.

Critical Dimensions and Engineering Drawings

Critical dimensions first appeared with engineering drawings in 18th-century France and became a key element in interchangeable parts technology, as developed in the US in the 19th century. In the picture below, the left side shows the kind of drawings you find in the Diderot Encyclopedia from 1777. They are artistically compelling but technically imprecise, compared with the engineering drawing on the right.

No one would admire the engineering drawing for its beauty, but it features critical dimensions. Gaspard Monge invented this type of drawing about 1765, and it’s so standard now that we tend to forget it didn’t always exist. In Paris, a street, a square, and a subway station bear the name of Monge but few Parisians know why. The Rise of Empires: Ottoman series has a Hungarian cannon maker named Orban showing Mehmet II what looks like an engineering drawing of a gigantic gun in 1453, ~300 years too early.

Gribeauval and His Cannons

Gribeauval started the development of interchangeable parts technology for cannons in the 1760s, and his system was used through the Napoleonic era.

Honoré Blanc and His Muskets

Honoré Blanc continued with muskets. In his 1790 memo, Blanc pleaded for continued support of his work with the legislative assembly of revolutionary France. Five years before, he had shown Ambassador Thomas Jefferson musket locks with interchangeable parts. To highlight the problems he was facing, he wrote this about screws:

“Repairing screws would be less difficult if it weren’t for the fact that, in musket workshops, there aren’t two dies whose taps are exactly the same size, and whose thread pitch is perfectly equal.” Blanc, H. (1790) Mémoire Important sur la Fabrication des Armes de Guerre, Gallica, p. 13

In 2023, anyone with a drawer full of incompatible charging cables for electronics can understand his predicament.

Blanc’s remedy was to specify critical dimensions like length, diameter, and thread pitch, assuming that screws with matching values would interchange.

Relationship with Standards

Quality characteristics, including measured variables, are the object of product standards, which is a whole other topic. The question here is how well a set of measured variables technically predicts quality, understood as the agreement of product behavior with user expectations.

True and Substitute Characteristics

The measured variables are a special case of what Juran calls substitute characteristics. While the true characteristics of a product are user expectations, these cannot always be observed, or their observation may require the object’s destruction.

The proof of a cake is in the eating, but, in the pastry business, you cannot eat all the cakes you make. On the other hand, you can measure their weight, diameter, and sugar content. If any one of these characteristics is wrong, you know the cake is bad, but just because they are all right doesn’t guarantee that the cake is good. Writing in 1980, Philip Crosby missed this key point when he defined quality as “conformance to requirements.”

Choosing Measured Variables

Quality Function Deployment (QFD) is the closest to a general theory of how to do it. It leads you to identify candidate dimensions but stops short of validating them as predictors of product quality.

Quality Function Deployment (QFD)

In the 1960s, Yoji Akao (赤尾, 洋二) and Shigeru Mizuno (水野, 滋) developed Quality Function Deployment (QFD) to map user needs systematically to technical features of products, and eventually numerical characteristics to use for validation. QFD is best known for its House of Quality, in which measured variables appear at the bottom.

Validating Measured Variables

If the product is a car door, the torque to open it is a natural choice. It’s easily measurable in non-destructive ways and is a key part of the user experience of opening and closing a car. It is not always so obvious. If you are making semiconductors, you are building in each chip millions of structures like the one in this picture, with, in 2023, line widths of ~1.2 nm:

Piling up these layers takes hundreds of operations involving multiple loops of patterning by lithography, wet and plasma etching, thin film deposition, solid-state diffusion, and ion implantation. And you don’t know whether you have built a circuit that does anything useful until you can test its functions at the end of the process.

What you can observe in the process is limited to line widths, misalignments, film thicknesses, dielectric constants, refractive index,… all variables with limited predictive power on the functioning of the finished circuit, and complex interactions between variables measured at different layers.

May and Spanos’s 2006 book Fundamentals of Semiconductor Manufacturing and Process Control lists variables that can be measured at the end of each operation on transistors within a chip. NVIDIA’s 2023 GPU chips each contain ~80 billion transistors. They are made on silicon wafers that are 300 mm in diameter, and each wafer carries ~70 chips. As the variables are difficult to capture, you are limited to minuscule samples.

This is clearly a situation that calls for different thinking than torques to open car doors. For the metrology of just ion implantation, one of the processes in chipmaking, see Michael Current’s 2014 article.

Requirements on Measured Variables

If we take a step back from examples, we can see general requirements for measured variables:

- Existence. A measured variable should either be a parameter of the product design or a quantity that can be derived from the design. The door-opening torque or the weight of a cereal box are design parameters. The sheet resistivity of a silicon wafer after ion implantation is a predictable consequence. It’s not part of the design but it is derived from it. The key point is that the dimension exists in the physicochemical model of the process.

- Observability. For a quantity to work as a measured variable, you must be able to collect it. You need an instrument that gives you an approximation or bounds on its value.

- Relevance. Just because a variable is well-defined and observable does not mean it is relevant to the quality of the product. Where it is not obvious, you establish relevance by screening and Response Surface Methodology (RSM). This work combines process knowledge, Design Of Experiments (DOE), and data mining.

For geometries, a whisker switch can give you an upper bound on the height of a product for every unit passing under it, spindle probes in a machining center can take measurements while a unit is still in it, or you can take a unit to a Coordinate Measurement Machine (CMM) and measure a large number of dimensions on selected units.

The completeness of the data, the investment required, and the impact on production operations vary. And the performance of sensors, instruments, and data acquisition systems improves over time, changing the parameters of the choices.

Quality Performance and Substitute Characteristics

These concepts can be expressed in general terms through conditional probabilities. Let U be the proposition that the product unit meets user expectations, S_i the proposition that it meets the requirements for the i-th substitute characteristic, for i=1,\dots,n, and \overline{S_i} the proposition that it doesn’t. Then we have the following:

- P\left ( U |\overline{S_i} \right ) = 0 for all i from 1 to n. If the unit fails any of the substitute characteristics, we are certain it won’t meet user expectations.

- P\left ( U | S_1, \dots, S_n \right ) > 0 and increases with n. If it passes all, there is a positive probability of meeting expectations, which rises as we check more substitute characteristics.

JD Power’s Initial Quality Studies

Whether the buyer of a new car reports a problem to the dealer within 90 days of the purchase is a substitute characteristic of finished cars that car makers watch through the JD Power and Associates Initial Quality Studies (IQS). It’s not the true characteristic but it’s a component of it that we can estimate and relate to measured variables.

Initial Car Quality Is Not That Great

In 2022, Buick was the best-performing brand, with an average of 1.39 problems reported per unit, and this is for vehicles that all meet the tens of thousands of specs defined for its ~30,000 subassemblies and components.

If V is the proposition that a new Buick buyer has no problem to report in the first 90 days after purchase and \left (S_1,\dots,S_{100,000} \right ) represents success against all the checks applied in the manufacturing process, then, assuming that the number of problems per unit follows a Poisson distribution, the average of 1.39 problems per unit works out to

P\left (V|S_1,\dots,S_{100,000 } \right )= e^{-1.39} \approx 25\%

In other words, after all the measurements, go/no-go gauge checks, visual inspections, and functional tests carried out during the manufacturing process on ~30,000 parts, the buyer of the best-performing brand in the 2022 IQS only has one chance in four of not reporting a problem in the first 90 days after purchase!
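The 25% figure can be reproduced in a few lines. This is a minimal sketch that assumes, as the formula above does implicitly, that the number of problems reported per vehicle follows a Poisson distribution, so that the probability of zero problems is e^{-\lambda}. The function name is made up for illustration.

```python
import math


def prob_no_problem(mean_problems_per_unit: float) -> float:
    """P(zero problems) under an ASSUMED Poisson distribution of problem counts."""
    return math.exp(-mean_problems_per_unit)


# 1.39 problems per unit is the 2022 figure quoted in the text.
print(f"{prob_no_problem(1.39):.0%}")  # prints 25%
```

If the problem counts were more dispersed than a Poisson model allows, the share of buyers with nothing to report would differ from this estimate.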

And this is only a subset of the expectations of a new car buyer over total time of ownership. This happens in an industry where parts are commonly produced with single-digit rates of defective parts per million (dppm). This is a dramatic example of the shortcomings of Crosby’s definition of quality as “conformance to requirements.”

Is It Improving?

The following table shows the best brand IQS ratings since 2003. The best in this case is more interesting than the average, as an indicator of what the industry is capable of. IQS ratings have gone in the wrong direction since 2019, when Hyundai’s luxury brand Genesis achieved an IQS rating of 0.63, corresponding to a 53% probability of not reporting any problem in the first 90 days of ownership.

Two salient features of this data are (1) that the catastrophic drop in performance since 2019 coincided with the COVID-19 pandemic, and (2) that Japanese brands have lost the dominance they had in the 2000s. No Japanese brand has held the top spot since 2012.

It should be noted that cars have changed between 2003 and 2023, particularly in their electronics, and that the new infotainment systems have been a source of customer complaints. Still, the IQS numbers were moving in the right direction until 2019, in spite of the new electronics, and the drop in performance since 2019 coincides with the COVID-19 pandemic.

Car Paint

The paint is the first thing prospective buyers see on a car, and car makers are keen to impress them with its beauty. Painting, however, is a process they still struggle with. They paint car bodies with doors attached, then separate them from the body for subassembly, and rejoin them later, taking pains to ensure they reassemble the doors with the car they came from.

Why? Doors for the same model in the same color should be interchangeable, and mounting the door from car A onto car B and vice versa should make no difference. In reality, however, it does. Even though cars A and B are supposed to be the same color, there are subtle differences in nuance between the two that customers won’t notice on a whole car but that observant buyers will notice when doors are cross-mounted, raising doubts about the overall quality of the car.

This means that, even though industry has been painting cars for 140 years, full interchangeability of painted parts is still elusive!

From Single Values to Tolerances

The validation of a measured variable is rarely in terms of an exact match of measurement to spec but in terms of the presence of the measurement in an interval called tolerance.

Origin and Spread of Tolerances

Working on musket locks in the 1780s, Honoré Blanc recognized early on that specifying single values for critical dimensions was impractical, and that variations within a sufficiently small range did not impair interchangeability.

Yet Shewhart reports tolerances as being first introduced ca. 1870. This suggests that the concept of tolerance took decades to take root in industry. David Hounshell quotes angry memos exchanged between production managers and inspectors in the 1870s at Singer Sewing Machines. The production managers insisted that parts were close enough to specs; the inspectors rejected them for being off-spec. This is exactly the kind of conflict tolerances were supposed to avoid.

Measurement Instruments

One possible reason for the slow uptake of tolerances was the imprecision of 18th-century instruments. If the measurements are blurry, only defective parts will produce discrepancies large enough to notice. Contemporary forging instructions said the metal should be “cherry red”; they didn’t have thermometers working in that range of temperatures.

This logic broke down as instruments developed the ability to detect discrepancies small enough not to affect function. It forced manufacturers to decide which discrepancies were too large to ignore.

| Year | Instrument | Resolution |
|---|---|---|
| 1631 | Vernier caliper | 25.400 μ |
| 1776 | Micrometer | 0.250 μ |
| Today | Laser micrometer | 0.001 μ |

What Resolution/Accuracy/Precision Do We Need?

The literature distinguishes between the resolution of an instrument – the number of digits it gives you, its accuracy – its ability to neither over- nor underestimate, and its precision – its ability to give you the same value when measuring the same object multiple times.

A bathroom scale shows your weight on a dial, in whole pounds, or tenths of a pound. That’s its resolution. You find that the scale at the doctor’s office gives you 2 lbs less than the one at home, which means that at least one of the two is inaccurate. If you step on the same scale multiple times and it gives you results that vary within ±0.5 lbs, that is its precision.
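As a rough sketch of the distinction, the snippet below estimates accuracy and precision from repeated readings of a reference weight whose true value is assumed known. The readings are made-up numbers, and expressing precision as half the observed range is a simplification chosen to match the “±” wording above; formal studies such as Gauge R&R work with standard deviations instead.

```python
from statistics import mean


def accuracy_and_precision(readings, reference):
    """Return (bias, half_range) for repeated readings of one known reference."""
    bias = mean(readings) - reference                  # accuracy: systematic offset
    half_range = (max(readings) - min(readings)) / 2   # precision: width of the ± band
    return bias, half_range


# Hypothetical readings, in lbs, of a 150.0 lb reference weight.
readings_lbs = [150.4, 149.8, 150.1, 150.3, 149.9]
bias, half_range = accuracy_and_precision(readings_lbs, reference=150.0)
print(f"bias = {bias:+.1f} lbs, repeatability = ±{half_range:.1f} lbs")
```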

In daily operations, you want to trust that measurement instruments are not leading you astray and, for this, you want the measurement errors to be so small that you can handle the measurements as if they were exact values. Then, they show differences between workpieces rather than noise from the measurement instrument.

Suppose you will do calculations on the measurements and are interested in a result within ±0.1 mm. In that case, you need to start with measurements to ±0.01 mm or ±0.001 mm to keep rounding errors from influencing the result.

Looking After the Instruments

You must periodically check and calibrate them. Even in organizations where quality is understood to be part of every employee’s job and data acquisition is automated, this particular task is done by specialists from the Quality Department, trained in methods like Gauge Repeatability and Reproducibility (Gauge R&R) and Measurement System Analysis (MSA).

Measurement Issues in Operations

The first step in investigating a problem usually is to check the instruments and the use of the data. And the problems you encounter when doing so are often not subtle.

Rolling Mill Example

The plant used a 100-year-old rolling mill to turn 3-in thick slabs of lead into plates 1/16th in thick, used in roofing. While rolling, the plates systematically deviated from the center of the mill. The engineer thought it was due to slight differences in the gap between rolls on both sides of the machine, and organized the operators to collect thicknesses at all four corners of the plate. At first, the measurements showed no difference between the sides of the plates.

Examining the manually collected data, the engineer noticed that the operators had recorded two significant digits when the measurement instruments provided four. The operators had rounded off the last two. A second campaign of measurement, recording all four significant digits, revealed that there was indeed a difference in gap height between the two sides. They solved the problem by replacing the control system for the gap between rollers.

Semiconductor Test Example

Solid state image sensors include a “light shield” layer that manufacturers test for a voltage called “V to Light Shield.” In one case, the yield of this test suddenly collapsed from 100% to about 65%. The historical data revealed that it had been 100% only for the past three months, and the earlier data were consistent with the 65% level.

No process change explained the jump to 100% three months earlier. No one had paid attention to this one because it was one of many tests performed on the chips, and the 100% yield did not set off alarms.

However, retests of available parts from that period confirmed that the “100%” figure had been wrong. The conclusion was that, for three months, the test equipment had failed to connect with the chips, and that the yield had been low all along.

We Only Get an Interval Anyway

An instrument that tells you that the length of a rod is 250.00 mm is bracketing this length to ±5μ. It tells you it is in the interval [249.995, 250.005]. In your model, the rod has a length that, with more precise instruments, you can bracket more closely, but you never get to the number.
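The bracketing can be made explicit. The sketch below assumes that the instrument rounds to the nearest displayed digit, so that a reading stands for an interval of plus or minus half a unit in the last place; the function name is hypothetical.

```python
from decimal import Decimal


def reading_interval(reading: str):
    """Interval implied by a displayed reading, ASSUMING round-to-nearest display."""
    value = Decimal(reading)
    # Size of one unit in the last displayed place, e.g. 0.01 for "250.00".
    step = Decimal(10) ** value.as_tuple().exponent
    half_step = step / 2
    return value - half_step, value + half_step


low, high = reading_interval("250.00")
print(low, high)  # prints 249.995 250.005
```

The reading is passed as a string because the number of displayed decimals carries the information: "250.00" and "250.0" imply different intervals.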

You can easily get an apparently “exact” value if the spec is written with two decimal places and your instrument doesn’t give you any more. If the instrument reads “250.00 mm,” you may see it as an exact match, but it’s an illusion.

Physical quantities like length, mass, resistivity, or oxygen content are parameters of a theoretical model of the workpiece. Such quantities are what mathematicians invented real numbers for. Both measurements and specs, with their significant digits, are rational numbers. They can be arbitrarily close to the actual values but never match them.

Measurements versus go/no-go gauges

When making musket locks with interchangeable parts in the 1780s, Honoré Blanc was aware of these issues. Rather than measure each dimension with an instrument, he developed a set of go/no-go gauges to check that dimensions were within an interval.

You lose information when you use go/no-go gauges instead of measurements. Instead of a number, you only get at most one bit. For this reason, the founders of statistical quality control considered measurements preferable to attributes. As discussed above, this matters for analysis but not when making pass/fail decisions in daily operations.

Measurements required instruments, labor, and time. For this reason, operators couldn’t practically measure every unit, so they took measurements on samples. Go/no-go checks, on the other hand, are quick enough to perform on every unit.

Then, it’s not obvious that you get more information from measurements on samples than from go/no-go checks on all units. Later, automatic data acquisition from sensors changed this game, by enabling the measurement of 100% of the parts without slowing down production.

Two Ways of Expressing Tolerances

You find tolerances expressed either as intervals \left [ L,U \right ] in operator work instructions or as targets with a band on each side, in the form T \pm C in engineering specs.

Checking that a measurement is between L and U is easier for a production operator than subtracting T from the measurement and verifying that the result is within \pm C.
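The two forms lead to the same pass/fail decision, as the following sketch illustrates. The function names and the numbers in the usage lines are illustrative assumptions, not part of any standard.

```python
def to_interval(target, band):
    """Convert the engineering form T ± C into the interval form [L, U]."""
    return target - band, target + band


def within_interval(x, lower, upper):
    """Operator-style check: is the measurement between L and U?"""
    return lower <= x <= upper


def within_band(x, target, band):
    """Engineering-style check: is the deviation from T within ± C?"""
    return abs(x - target) <= band


# Hypothetical spec: T = 10.0, C = 0.5, hence [L, U] = [9.5, 10.5].
lower, upper = to_interval(10.0, 0.5)
for x in (10.2, 10.6):
    print(x, within_interval(x, lower, upper), within_band(x, 10.0, 0.5))
```

The interval check needs only two comparisons and no arithmetic, which is the practical advantage for work instructions.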

Of course, today, you are less likely to have the operator read a number on the instrument than to have the instrument turn on a green or red light, automatically set aside failed parts, raise an alarm and stop the production line.

In engineering, on the other hand, there is a difference between the two forms, even if L = T-C and U = T+C. The interval format suggests that it makes no difference where the value falls between L and U, whereas the T \pm C form suggests that T is a target value, that \pm C bounds the deviation from target, and that, within these bounds, values closer to T are better. This is the root of the Taguchi method.

Multivariate Tolerances

When you have two measured variables X_1 and X_2 , their tolerance intervals form a rectangle in the X_1X_2 plane, such that a part conforms to specs if and only if the point (x_1,x_2) of its measurements is within this rectangle. With three critical dimensions, we have a rectangular prism instead of a rectangle, which we can also visualize:

The Tolerance Hyperrectangle

The same geometrical thinking applies with more than 3 measured variables, but we cannot visualize the resulting hyper-rectangle.

An automatic car transmission case has about 2,250 critical dimensions X_1, \dots, X_{2,250}, with a tolerance interval I_k for each. If we use the notation \mathbf{X} = (X_1, \dots, X_{2,250}) to summarize all the definitions of the critical dimensions and \mathbf{x} = (x_1, \dots, x_{2,250}) for the measurements taken on a unit, then the conformance of this unit to the specs can be expressed as \mathbf{x} \in I_1\times\dots\times I_{2,250} , the tolerance hyperrectangle.

This model works when the measured variables are independent. In a car, this is true of a piston’s diameter and the shock absorber’s length.
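Checking a unit against the tolerance hyperrectangle amounts to checking each measurement against its own interval. Here is a minimal sketch, with hypothetical function names and three made-up dimensions standing in for the 2,250 of the transmission case:

```python
def out_of_tolerance(measurements, intervals):
    """Indices k for which x_k falls outside I_k = (low_k, high_k)."""
    if len(measurements) != len(intervals):
        raise ValueError("one interval is required per measured variable")
    return [
        k
        for k, (x, (low, high)) in enumerate(zip(measurements, intervals))
        if not low <= x <= high
    ]


def conforms(measurements, intervals):
    """True if the vector x lies in I_1 x ... x I_n."""
    return not out_of_tolerance(measurements, intervals)


# Hypothetical intervals and measurements for three dimensions.
intervals = [(9.5, 10.5), (19.9, 20.1), (4.95, 5.05)]
print(conforms([10.1, 20.0, 5.00], intervals))          # True
print(out_of_tolerance([10.1, 20.3, 5.00], intervals))  # [1]
```

Returning the failing indices rather than just a pass/fail flag keeps the information about which dimension caused the rejection.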

Shmoos

Semiconductors, however, are a different story. In the late 1970s, when engineers analyzed the measured variables of chips that passed their functional tests, they found that the regions of valid combinations of values were not hyperrectangles but “hyperpotatoids” of varying shapes that they chose to call “shmoos,” after a character from Al Capp’s Li’l Abner comic strip from the 1940s.

If the hyperrectangle is inside the shmoo, you fail units that would pass the test. If it’s outside, you pass units that would fail the test. You do both if it intersects with both the inside and the outside of the shmoo.

Two special cases are of interest:

If the shmoo is inside the hyperrectangle, then each measured variable will only filter out gross deviations. Then you need a final test to weed out the parts that are within all tolerances but don’t work. This is the prevalent situation in the semiconductor industry.

If, on the other hand, you could make chips so that all parts fit within a hyperrectangle inside the shmoo, they would all pass the test. The problem is that the dynamics of the semiconductor industry do not allow this to happen. To get there, it might take ten years of engineering from product launch, but the product is obsolete in four, after which the engineers start over with the next generation.

This means that the tolerance hyperrectangle is simplistic, but today’s machine learning provides many techniques that take into account the interactions between measured variables that shape the shmoo.

At any point in the process, you have the measurements that have been taken at all upstream operations, and you need to decide whether to pass the part on for further processing or scrap it. Passing it on means putting more labor, materials, and energy into it in order to collect the revenue from selling it if it passes final test. If a model gives the probability of passing final test given the measurement values already collected, you can base your decision on the expected payoff.
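The decision rule can be sketched as follows. The probability of passing final test is taken as given, for instance as the output of a fitted model. The function names, the optional scrap value, and the numbers in the usage lines are all assumptions made for illustration.

```python
def expected_payoff_of_continuing(p_pass, revenue, remaining_cost):
    """Expected revenue at final test minus the cost still to be spent on the part."""
    return p_pass * revenue - remaining_cost


def should_continue(p_pass, revenue, remaining_cost, scrap_value=0.0):
    """Continue only if the expected payoff beats what scrapping now would return."""
    return expected_payoff_of_continuing(p_pass, revenue, remaining_cost) > scrap_value


# Hypothetical part: 100 in revenue if it passes, 40 still to be spent on it.
print(should_continue(p_pass=0.65, revenue=100.0, remaining_cost=40.0))  # True
print(should_continue(p_pass=0.30, revenue=100.0, remaining_cost=40.0))  # False
```

Costs already sunk into the part do not appear in the rule: only what remains to be spent matters to the decision.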

Tolerance Stacking

How do tolerances on individual parts stack up to tolerances on an assembly of these parts? Oddly, the only treatments I could find of this obviously important topic were in publications from manufacturing companies, Western Electric (1956) and Bosch (2022).

Variability and Tolerances

There are two distinct problems to consider:

- Inferring the variability of an assembly from that of its parts.

- Setting tolerances on the parts to meet the required tolerances on the assembly.

The first problem is easily solved in terms of standard deviations when the characteristics of the parts are independent, and each has a mean and standard deviation. No further assumptions are needed.

The formulas we will explain below show the standard deviation of the assembly as the root of a weighted sum of squares (RSS) of the standard deviations of the component characteristics. Where these formulas can’t be used, you can combine simulations of the part characteristics to simulate the distribution of the assembly’s characteristic.

The second problem is more complex than the first because it touches on process capability. If the tolerance for part i is of the form \mu_i \pm c\sigma_i with the same c for all the i = 1,\dots,k, then the corresponding tolerance for the assembly characteristic Y will also be of the form \mu_Y \pm c\sigma_Y.

If we start from the tolerance for Y, then the formulas give us constraints on the tolerances for the k characteristics of parts that go into Y.

Parts in Series

You have this problem when you connect resistors in series or stack four 1.5 V batteries in a device:

We’ll use the example of three rods and their lengths:

Most engineers believe that if, as in the following picture, you assemble three rods each with length within 1 ft ± 1 mil, then the best you can say about the assembly is that its length is within 3 ft ± 3 mils. In other words, whatever tolerance we want on the three-rod assembly, we should make the tolerances three times tighter on the individual rods. This is called the Worst-Case approach to tolerance stacking. The problem with it is that it is pessimistic.

Original Treatment from the 1950s

As was first recognized in the Western Electric Statistical Quality Control Handbook (C-4, pp. 122-125), the lower and upper bounds of a tolerance interval are not additive. The centers of the tolerance intervals add up but their bounds don’t. For the assembly to be 3 ft + 3 mils long would require each rod to be at the upper bound of 1 ft + 1 mil. The conjunction of these three events is clearly less likely than any one of them individually.

Up to this point, we have discussed only requirements expressed as tolerances, but we have assumed nothing about the measured variables. The solution offered in the Western Electric handbook is based on the following assumptions:

- The total length Y = X_1 +\dots+ X_k where X_i is the length of the i-th rod.

- The X_i are independent random variables.

- Each of the X_i has an expected value \mu_i and standard deviation \sigma_i.

- The tolerance interval for each X_i is set at \mu_i \pm c\times\sigma_i where the multiplier c is the same for all i = 1\dots k.

Note that the X_i are only required to be independent. They are not required to be Gaussian and they are not required to have identical distributions. It is sufficient for the variances of the X_i to add up, so that the variance \sigma^2 of Y is

\sigma^2 = \sigma^2_1 +\dots+ \sigma^2_k

and the standard deviation

\sigma = \sqrt{\sigma^2_1 +\dots+ \sigma^2_k}

Since the expected value of Y is \mu = \mu_1+\dots+\mu_k, it is consistent with the tolerance setting for the X_i to set the tolerances for Y at \mu\pm c\times\sigma.

The tolerance interval for the 3-rod assembly is therefore 3\, \text{ft} \pm \sqrt{1 + 1 + 1} \,\text{mils} = 3 \,\text{ft} \pm 1.732\, \text{mils}, which is just above half of what the direct addition gives you.
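The comparison between the two stacking rules is easy to reproduce. The sketch below takes the tolerance half-widths of the parts, all assumed to be set at the same multiple c of their standard deviations as in the assumptions above, and returns the half-width for the assembly under each rule. The function names are hypothetical.

```python
import math


def worst_case_stack(half_widths):
    """Worst-Case rule: the half-widths simply add up."""
    return sum(half_widths)


def rss_stack(half_widths):
    """Root-sum-of-squares rule for independent parts in series."""
    return math.sqrt(sum(t * t for t in half_widths))


rods_mils = [1.0, 1.0, 1.0]  # three rods, each at ± 1 mil
print(worst_case_stack(rods_mils))     # 3.0
print(round(rss_stack(rods_mils), 3))  # 1.732
```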

Since tolerances are requirements, you start from the requirements on the assembly. It gives you the maximum standard deviation it is allowed to have. Then the constraints on the \sigma_1,\dots,\sigma_k are that they must be positive and that the vector \left (\sigma_1,\dots,\sigma_k \right ) must be inside the sphere centered on 0 and of radius \sigma.
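As a worked example under the same assumptions, suppose the requirement on the three-rod assembly is 3 ft ± 3 mils and, as one allocation among many possible ones, all three rods get the same tolerance \pm t. Then

t\sqrt{3} = 3\,\text{mils} \quad\Rightarrow\quad t = \frac{3}{\sqrt{3}}\,\text{mils} = \sqrt{3}\,\text{mils} \approx 1.732\,\text{mils}

Each rod can therefore be held to about ± 1.732 mils, rather than the ± 1 mil that the Worst-Case approach would demand.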

Parts may contribute to more than one characteristic of the assembly, which may create more constraints.

Generalization

The term “tolerance stacking” suggests a simple example like the rods. Bosch published a more elaborate and current treatment in 2022. It applies to resistors in parallel as well as resistors in series and many other, more realistic cases:

In a more general case, a characteristic Y of the assembled product is a function f of the vector \mathbf{X} = \left ( X_1,\dots,X_k \right ) of the characteristics of its k components:

Y= f\left ( \mathbf{X} \right )

For resistors in series,

Y_S= f_S\left (R_1, R_2\right )= R_1 + R_2

This is the special case treated above. For resistors in parallel, on the other hand,

Y_P= f_P\left (R_1, R_2\right )= \frac{R_1 R_2}{R_1 + R_2}

In a working assembly process, we can assume that Y and \mathbf{X} are subject to small fluctuations. For geometric dimensions, we are talking about deviations in mils from targets in inches.

Y =\mu_Y + \Delta Y with \Delta Y having 0 mean and standard deviation \sigma_Y, while \mathbf{X} =\left (\mu_1 + \Delta X_1, \dots, \mu_k + \Delta X_k \right ), with the \Delta X_i independent, with 0 mean and standard deviations \sigma_i.

If f has a gradient at \mathbf{ \mu_X} = \left ( \mu_1,\dots,\mu_k\right ), and \Delta\mathbf{X} =\left (\Delta X_1, \dots,\Delta X_k \right ), then

\Delta Y \approx \text{grad}\left [ f\left (\mathbf{ \mu_X} \right ) \right ]\cdot \Delta\mathbf{X} = \frac{\partial f }{\partial x_1}\times \Delta X_1 +\dots+ \frac{\partial f }{\partial x_k}\times \Delta X_k

Then, since the terms of this sum are independent, their variances add up, and

\sigma_Y^2 = E\left (\Delta Y^2 \right )\approx \left (\frac{\partial f }{\partial x_1} \right )^2\sigma_1^2 +\dots+ \left (\frac{\partial f }{\partial x_k} \right )^2\sigma_k^2

This is known as the Gaussian law of error propagation, applied here to deviations from target rather than measurement errors. It does not require the variables involved to follow Gaussian distributions. Gauss worked on many topics, not just the distribution that is often called “Normal.” The law is brilliant: it provides a simple, applicable solution to a problem that otherwise looks daunting. We can see this with just two resistors in parallel.

In general f is not as simple as f_P above, but the process engineers should be able to provide values for it and its gradient, from formulas, algorithms, or simulations.
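
When f is only available as an algorithm or a simulation, one possible way to get the gradient is by central finite differences. The sketch below takes that route. The function `propagate_sigma`, its `rel_step` parameter, and the 100 Ω nominal values are assumptions made for this illustration, not part of the Bosch booklet.

```python
import math

def propagate_sigma(f, mu, sigmas, rel_step=1e-6):
    """First-order sigma of Y = f(X) for independent X_i.

    The partial derivatives at mu are estimated by central differences.
    """
    variance = 0.0
    for i, (m, s) in enumerate(zip(mu, sigmas)):
        h = rel_step * max(abs(m), 1.0)          # step scaled to the nominal value
        up, down = list(mu), list(mu)
        up[i], down[i] = m + h, m - h
        partial = (f(up) - f(down)) / (2.0 * h)
        variance += (partial * s) ** 2
    return math.sqrt(variance)

def series(r):
    return r[0] + r[1]

def parallel(r):
    return r[0] * r[1] / (r[0] + r[1])

mu = [100.0, 100.0]      # hypothetical nominal resistances, in ohms
sigmas = [1.0, 1.0]      # hypothetical standard deviations, in ohms

sigma_series = propagate_sigma(series, mu, sigmas)
sigma_parallel = propagate_sigma(parallel, mu, sigmas)
print(f"series:   {sigma_series:.4f}")
print(f"parallel: {sigma_parallel:.4f}")
```

With these inputs, the series case gives about 1.414 Ω and the parallel case about 0.354 Ω, because each partial derivative of f_P equals 0.25 when the two resistances are equal.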

Example

For two resistors in parallel, if

\alpha_1\left ( R_1,R_2 \right ) = \frac{\partial f_P}{\partial R_1} = \frac{R_2^2}{\left ( R_1+R_2 \right )^2}

and

\alpha_2\left ( R_1,R_2 \right ) = \frac{\partial f_P}{\partial R_2} = \frac{R_1^2}{\left ( R_1+R_2 \right )^2}

then

\sigma_R = \sqrt{\alpha_1\left ( R_1, R_2 \right )^2\times \sigma_1^2 + \alpha_2\left ( R_1, R_2 \right )^2\times \sigma_2^2}

Plugging R_1 = 47 \pm 0.8\, \Omega and R_2 = 68 \pm 1.1\, \Omega into this formula, the Bosch document comes up with R = 27 \pm 0.3\, \Omega for the two resistors in parallel.
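
The computation can be reproduced with the closed-form partial derivatives given above. In this sketch, the ± values are propagated in place of the \sigma_i, which is legitimate only under the assumption that they all use the same multiplier c. The function names are made up for this illustration.

```python
import math

def parallel(r1, r2):
    """Resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)

def parallel_spread(r1, r2, s1, s2):
    """First-order spread of the parallel resistance from the spreads s1, s2."""
    alpha_1 = r2 ** 2 / (r1 + r2) ** 2      # partial derivative with respect to R_1
    alpha_2 = r1 ** 2 / (r1 + r2) ** 2      # partial derivative with respect to R_2
    return math.sqrt((alpha_1 * s1) ** 2 + (alpha_2 * s2) ** 2)

nominal = parallel(47.0, 68.0)
spread = parallel_spread(47.0, 68.0, 0.8, 1.1)
print(f"R = {nominal:.2f} +/- {spread:.2f} ohms")
```

The formula for f_P gives a nominal value of about 27.8 Ω, and the propagated spread is about 0.33 Ω, which rounds to the ± 0.3 Ω quoted above.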

Special Cases

In the special case discussed earlier,

f\left ( \mathbf{X} \right ) = X_1+\dots+X_k

and all the \frac{\partial f}{\partial x_i} = 1, and \sigma_Y^2 = \sigma_1^2 + \dots + \sigma_k^2 as above.

If f is linear in \mathbf{X}, then f\left ( \mathbf{X} \right ) = \alpha_1X_1+\dots+\alpha_kX_k for some coefficients \alpha_i. Then

\sigma_Y = \sqrt{\alpha_1^2\sigma_1^2 + \dots + \alpha_k^2\sigma_k^2}

which means that, to achieve a given \sigma_Y, \mathbf{\sigma_X} = \left ( \sigma_1,\dots,\sigma_k \right ) needs to be inside the ellipsoid defined by this equation.

If f is not linear, then the \frac{\partial f}{\partial x_i} are evaluated at \mathbf{\mu_X} and change with it, so that \sigma_Y depends on both \mathbf{\mu_X} and \mathbf{\sigma_X}.
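
The effect of the nominal values in the nonlinear case can be seen with the parallel resistors. The sketch below keeps the component standard deviations fixed and moves only the nominal values. All numbers are hypothetical and chosen to make the contrast visible.

```python
import math

def parallel_gradient(r1, r2):
    """Partial derivatives of R1*R2/(R1+R2) with respect to R1 and R2."""
    total = r1 + r2
    return (r2 / total) ** 2, (r1 / total) ** 2

def first_order_sigma(gradient, sigmas):
    """Square root of the sum of squared (partial derivative * sigma) terms."""
    return math.sqrt(sum((g * s) ** 2 for g, s in zip(gradient, sigmas)))

sigmas = (1.0, 1.0)                              # same spreads in both cases
results = {}
for r1, r2 in [(50.0, 50.0), (10.0, 90.0)]:      # hypothetical nominal values
    results[(r1, r2)] = first_order_sigma(parallel_gradient(r1, r2), sigmas)
    print(f"R1={r1:g}, R2={r2:g}: sigma_Y = {results[(r1, r2)]:.4f}")
```

With identical \sigma_i, \sigma_Y goes from about 0.354 Ω to about 0.810 Ω, because the smaller resistor dominates the parallel combination.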

When Reality Does Not Meet the Assumptions

We can assess \sigma_Y from the \sigma_i, i = 1,\dots,k, but the straightforward translation into a tolerance interval for Y is predicated on the assumption made above that the tolerance interval for each X_i is of the form \mu_i \pm c\times\sigma_i where the multiplier c is the same for all i = 1\dots k.

When given formulas, engineers are prone to use them without questioning underlying assumptions too deeply. They are particularly so when issuing requirements to suppliers. For those who want to be more thorough, the Bosch document has 56 pages of details on options to use when the X_i are not Gaussian.
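
One simple way to probe the assumptions is a Monte Carlo simulation. The sketch below is not one of the methods in the Bosch document. It assumes, purely for illustration, that each resistor value is uniformly distributed over its tolerance interval, and compares the simulated standard deviation of the parallel resistance with the first-order formula. It also counts the share of simulated values within two standard deviations of the nominal value.

```python
import math
import random
import statistics

def parallel(r1, r2):
    return r1 * r2 / (r1 + r2)

def first_order_sigma(r1, r2, s1, s2):
    a1 = (r2 / (r1 + r2)) ** 2
    a2 = (r1 / (r1 + r2)) ** 2
    return math.sqrt((a1 * s1) ** 2 + (a2 * s2) ** 2)

rng = random.Random(0)                  # fixed seed so that runs are repeatable
mu1, half1 = 47.0, 0.8
mu2, half2 = 68.0, 1.1
s1 = half1 / math.sqrt(3)               # standard deviation of a uniform on +/- half1
s2 = half2 / math.sqrt(3)

samples = [
    parallel(rng.uniform(mu1 - half1, mu1 + half1),
             rng.uniform(mu2 - half2, mu2 + half2))
    for _ in range(50_000)
]
simulated = statistics.pstdev(samples)
predicted = first_order_sigma(mu1, mu2, s1, s2)
center = parallel(mu1, mu2)
coverage = sum(abs(y - center) <= 2 * predicted for y in samples) / len(samples)

print(f"predicted sigma: {predicted:.4f}")
print(f"simulated sigma: {simulated:.4f}")
print(f"share within 2 sigma: {coverage:.3f}")
```

The standard deviations should agree closely because the variance formula does not depend on the shape of the distributions. The share of values inside a given multiple of \sigma_Y, on the other hand, does depend on the shape, which is why the translation into a tolerance interval needs more care.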

Statistical Tolerancing Today

The most astonishing aspect of statistical tolerancing is how few engineers have heard of it. It has been around for decades. 70 years ago, Western Electric taught it to suppliers. Today, Bosch does. Most of the literature is limited to geometry. It is, however, relevant to electrical or chemical characteristics as well.

Tolerance Gaming

Fallible human beings set tolerances and occasionally yield to the temptation of manipulating the limits. While widely believed in the US to be an example of punctuality, German trains are, in fact, chronically late. To improve on-time arrival performance, the Deutsche Bundesbahn (DB) changed the definition of on-time arrival from within ±5 min of schedule to ±10 min. This is according to Prof. Meike Tilebein from the University of Stuttgart.

Another form of gaming is to alter the product’s behavior when under test. This happened in the VW diesel emissions scandal of 2015.

Allowances and Clearances

Tolerances have two lesser-known siblings:

- Allowances. If you want a metal bushing in a hole, it needs to have a slightly larger diameter than the hole. To install it, you freeze it in liquid nitrogen to shrink it. Once cold, it slides into the hole and expands back as it returns to room temperature.

- Clearances. A clearance is a minimum distance between objects. It’s a single-sided requirement, often from an external mandate, like the local fire code.

The bushing diameter must be small enough to slide into the hole when frozen. It must also be large enough to sit firmly inside when warm. This range, or allowance, is a technical requirement of the process. It is not a deviation from a target, as in a tolerance.
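
The two conditions on the bushing can be written as a range for its diameter at room temperature. The sketch below is a simplified illustration only. The parameter names, the linear shrink fraction, and all numerical values are hypothetical, and a real process would take them from material data and process engineering.

```python
def allowance_range(hole_diameter, min_interference, min_slip_gap, shrink_fraction):
    """Return (low, high) bounds on the bushing diameter at room temperature.

    low:  large enough to sit firmly in the hole when warm.
    high: small enough to slide into the hole once frozen and shrunk.
    """
    low = hole_diameter + min_interference
    high = (hole_diameter - min_slip_gap) / (1.0 - shrink_fraction)
    return low, high

# Hypothetical values, in mm, with a hypothetical 0.2% shrink when frozen.
low, high = allowance_range(
    hole_diameter=25.000,
    min_interference=0.010,
    min_slip_gap=0.005,
    shrink_fraction=0.002,
)
print(f"bushing diameter between {low:.3f} mm and {high:.3f} mm")
```

If the shrink is too small for the required interference, `low` exceeds `high` and no bushing diameter satisfies both conditions.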

Conclusions

Tolerances are a well-established and useful concept. Yet, when you scratch the surface, applying it is not as straightforward as you might expect. In particular, the complexity of products explodes along with the ability to measure more product characteristics.

References

- Alder, K. (2010). Engineering the Revolution: Arms and Enlightenment in France, 1763-1815. United Kingdom: University of Chicago Press.

- Blanc, H. (1790). Mémoire Important sur la Fabrication des Armes de Guerre (Important Memorandum on the Manufacturing of Weapons of War). National Library of France.

- Cogorno, G. R. (2011). Geometric Dimensioning and Tolerancing for Mechanical Design 2/E. Ukraine: McGraw Hill LLC.

- Cox, N. D. (1986). How to Perform Statistical Tolerance Analysis. Milwaukee: ASQC Quality Press.

- Hounshell, D. (1984). From the American System to Mass Production, 1800-1932: The Development of Manufacturing Technology in the United States. United Kingdom: Johns Hopkins University Press.

- Juran, J. M. (1995). A History of Managing for Quality: The Evolution, Trends, and Future Directions of Managing for Quality. United States: ASQC Quality Press.

- May, G. S. & Spanos, C. J. (2006). Fundamentals of Semiconductor Manufacturing and Process Control. Germany: Wiley.

- Small, B.B. (Ed.) (1956). Statistical Quality Control Handbook. United States: Western Electric.

- Statistical Tolerancing (2022). Quality Management in the Bosch Group Technical Statistics Booklet No. 5, Edition 02.2022.

- Scholz, F. (2007). Statistical Tolerancing, STAT498B, University of Washington.

- Shewhart, W. A. (1939). Statistical Method from the Viewpoint of Quality Control. United States: Dover Publications.

#quality, #characteristics, #tolerances, #measuredvariable, #attributes

November 29, 2023 @ 5:51 pm

Fantastic article! So many great topics are covered. Each of these topics provides the basis for a great discussion. Has car quality gone down or are expectations significantly higher? Technology increases complexity while also making it easier to control processes.

Very few manufacturing companies focus on these issues and management seldom understands them. Thank you for the article.

November 29, 2023 @ 9:59 pm

Excellent and very good article. Lots of detailed data and examples. One question: What is your source for the go/no-go gauges of Honore le Blanc and Springfield? I would like to use them for my own book, too 🙂

November 30, 2023 @ 3:44 am

I have added the provenance of these pictures to the figure captions.

November 30, 2023 @ 7:09 am

Michel,

Enlightening as usual. However, you can do SPC in real time if you so wish, it does not have to be after-the-fact, no more so than the value you get.

However, how do you go through all this without a discussion of operational definitions?

Be well

November 30, 2023 @ 8:13 am

Thanks, Lonnie. I was hoping to hear from you.

Could you elaborate on what you mean by “SPC in real time”?

The omission of operational definitions is, indeed, a mistake. Could you write a few paragraphs about it? I would be happy to include them, with attribution.
