Jan 29 2024

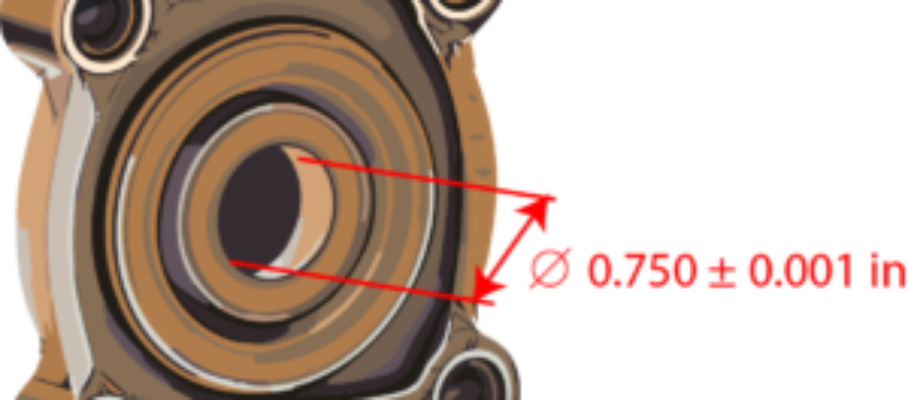

Measurement Errors

Like spouses in murders, measurement errors are always the prime suspect when measurements go awry. As soon as Apollo 13 had a problem, a Mission Control engineer exclaimed, “It’s got to be the instrumentation!”

It wasn’t the instrumentation. In general, however, before searching for a root cause in your process, you want to rule out the instrumentation. For that, you need to make sure it always gives you accurate and precise data.
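As a minimal sketch of the accuracy/precision distinction, assume you measure a reference standard of known value several times with the same instrument. Accuracy then shows up as bias, the offset of the average reading from the reference value, and precision as the spread of the repeated readings. The readings below are made-up numbers for illustration, and `gauge_summary` is a hypothetical helper, not a standard API.

```python
import statistics

def gauge_summary(readings, reference):
    """Summarize repeated readings of a reference standard (hypothetical helper).

    bias: mean reading minus the known reference value (accuracy).
    repeatability: sample standard deviation of the readings (precision).
    """
    mean = statistics.fmean(readings)
    return {
        "bias": mean - reference,
        "repeatability": statistics.stdev(readings),
    }

# Made-up readings of a 10.000 mm gauge block, in mm.
readings = [10.012, 10.009, 10.011, 10.010, 10.013, 10.008]
summary = gauge_summary(readings, reference=10.000)
print(f"bias = {summary['bias']:.4f} mm, "
      f"repeatability = {summary['repeatability']:.4f} mm")
```

With these numbers, the bias (about 0.0105 mm) is several times the repeatability (about 0.0019 mm): the instrument is precise but not accurate, and a process investigation based on its absolute readings would start from a wrong baseline.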

Mar 5 2024

Process Control and Gaussians

The statistical quality profession has a love/hate relationship with the Gaussian distribution. In SPC, the profession treats it like an embarrassing spouse: it bases all its control limits on the Gaussian distribution while claiming that the distribution doesn’t matter. In 2024, what role, if any, should this distribution play in setting action limits for quality characteristics?
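One way to see how much the Gaussian assumption is built into control limits is to compute, for a process in control, the probability that a single point falls outside the conventional limits at the mean plus or minus three standard deviations. The sketch below does this for a Gaussian and, as an assumed non-Gaussian contrast chosen only for illustration, for an exponential distribution with the same mean and standard deviation.

```python
import math

def gaussian_outside_3sigma():
    # P(|Z| > 3) for a standard normal Z, via the complementary error function.
    return math.erfc(3 / math.sqrt(2))

def exponential_outside_3sigma(mean=1.0):
    # For an exponential distribution, the standard deviation equals the mean,
    # so the lower limit mean - 3*sigma = -2*mean is negative and never crossed.
    upper = mean + 3 * mean
    # Only the upper tail contributes: P(X > upper) = exp(-upper / mean).
    return math.exp(-upper / mean)

p_gauss = gaussian_outside_3sigma()
p_expo = exponential_outside_3sigma()
print(f"Gaussian:    {p_gauss:.4%} of in-control points outside the limits")
print(f"Exponential: {p_expo:.4%} of in-control points outside the limits")
```

Under these assumptions, the Gaussian gives about 0.27% false alarms per point, while the exponential gives about 1.83%, nearly seven times as many. The ±3σ rule is therefore calibrated on the Gaussian, even when the data are not.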

By Michel Baudin • Data science, Technology • Tags: Control Charts, Control Limits, FMEA, gaussian, Normal distribution, pFMEA, process control, Quality, SPC