Jul 9 2012

What are standards for?

- Avoid unnecessary standards.

- Have the standards include a transparent and simple process for improving them.

Avoiding unnecessary standards

Unnecessary or counterproductive standards

Necessary standards

- System of units. In US plants of foreign companies, it is not uncommon to encounter both metric and US units. Companies should standardize on one system of units and use it exclusively.

- Technical parameters of the process, such as the torque applied to a bolt, or die characteristics in injection molding, diecasting, or stamping.

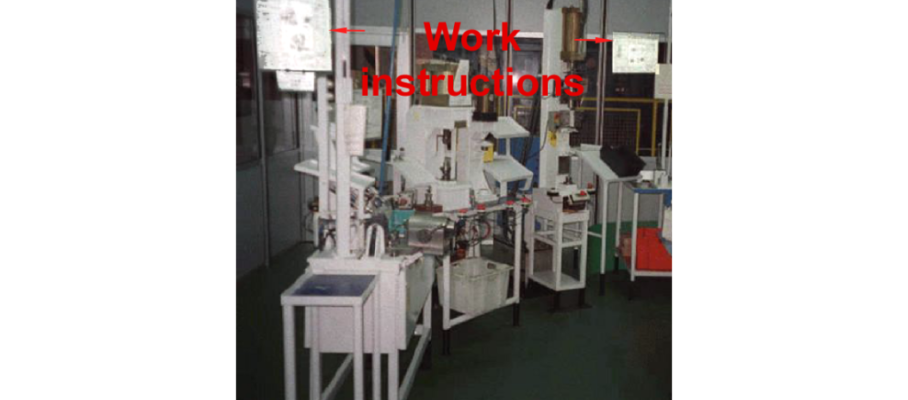

- Instruction sheet formats. Supervisors who monitor the work with the help of instruction sheets posted above each station need to find the same data in the same location on the A3 sheet everywhere.

- Operator interfaces to machine controls. Start and emergency stop buttons should look alike and be in consistent locations on all machines. So should the lights, sounds, and messages used for alarms and alerts.

- Andon light color code. Andon lights are useless unless the same simple color code is used throughout the plant, allowing managers to see at a glance which machines are working, idle, or down.

- Performance boards for daily management. Having a common matrix of charts across departments is a way to ensure that important topics are not forgotten and to make reviews easier. For a first-line manager, for example, you may have columns for Safety, Quality, Productivity and Organization, and rows for News, Trends, Breakdown by category, and Projects in progress.
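As a rough illustration of the performance-board item, the common matrix can be treated as one template that every department instantiates, so that only the contents differ from board to board. The Python sketch below uses the rows and columns named above; the function names `empty_board` and `same_layout` and the sample entry are hypothetical.

```python
# Rows and columns of the board, as described for a first-line manager.
COLUMNS = ("Safety", "Quality", "Productivity", "Organization")
ROWS = ("News", "Trends", "Breakdown by category", "Projects in progress")


def empty_board(department: str) -> dict:
    """Create a board for one department with an empty list in every
    (row, column) cell of the shared template."""
    return {
        "department": department,
        "cells": {(row, col): [] for row in ROWS for col in COLUMNS},
    }


def same_layout(board_a: dict, board_b: dict) -> bool:
    """True when two boards have exactly the same cells, so a reviewer
    finds each topic in the same place on both."""
    return board_a["cells"].keys() == board_b["cells"].keys()


if __name__ == "__main__":
    machining = empty_board("Machining")
    assembly = empty_board("Assembly")
    # Hypothetical content: entries differ between boards, the layout does not.
    machining["cells"][("Trends", "Quality")].append("Scrap rate, last 4 weeks")
    print(len(machining["cells"]))          # 16
    print(same_layout(machining, assembly))  # True
```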

Corporate Lean groups and standards

Question 1: How to boil water?
Answer 1: Take a pot, fill it up with water, place it on the stove, turn on the burner, and wait.
Question 2: How to boil water, when you already have a pot of cold water on the stove?
Answer 2: Empty the pot, put it away, and you are back to Question 1.

Instead of trying to develop and enforce a standard, one-size-fits-all methodology for all of a company’s plants, whose processes may range from metal foundry to final assembly, the corporate Lean group should focus on providing resources to help the plant teams develop their skills and learn from each other, but that is a different discussion.

Process for improving standards

When a production supervisor notices that an operator is not following the standard, it may mean that the operator needs to be coached, but it may also mean that the operator has found a better method that should be made the standard. But how do you make this kind of local initiative possible without jeopardizing the consistency of your process? The allowed scope for changes must be clear, and there must be a sign-off procedure in place to make them take effect.

Management policies

I remember an auto parts plant in Mexico that had dedicated lines for each customer. Some of the customers demanded to approve any change to their production lines, even if it involved only moving two machines closer together, but other customers left the auto parts maker free to rearrange their lines as it saw fit, as long as it did not change what the machines did to the parts. Needless to say, these customers’ lines saw more improvement activity than the others.

In this case, the production teams could move the torque wrench closer to its point of use, but they could not replace it with an ordinary ratchet and a homemade cheater bar. The boundary between what can be changed autonomously and what cannot is less clear in other contexts. In milling a part, for example, changing the sequence of cuts to reduce the tool’s air cutting time can be viewed as not changing the process but, if we are talking about deep cuts in an aerospace forging, cut sequencing can affect stresses and warpage.

If a production supervisor has the authority to make layout or work station design changes in his or her area of responsibility, these changes must still be made with the operators, and several support groups must be consulted or informed. Safety has to bless them; Maintenance must make sure that technicians still have the required access to equipment; Materials needs to know where to deliver parts if that has changed. Even in the most flexible organizations, there has to be a minimum of formality in the implementation of changes, and it is more complex if the same product is made in more than one plant. In the best cases, when little or no investment is required, the changes are implemented first, by teams that include representatives from all the stakeholders, and ratified later. We can move equipment on the basis of chalk marks on the floor, but, soon afterwards, the Facilities department must have up-to-date layouts.
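A minimal sketch of the "implement first, ratify later" sequence in Python, assuming that Safety, Maintenance, and Materials each record a sign-off. This is a simplification: the paragraph says some groups need only be informed. `LayoutChange` and its fields are hypothetical names, not the API of any document management product.

```python
from dataclasses import dataclass, field

# Support groups named in the paragraph above. Treating all three as
# required sign-offs is a simplifying assumption.
REQUIRED_GROUPS = frozenset({"Safety", "Maintenance", "Materials"})


@dataclass
class LayoutChange:
    """Hypothetical record of a local layout change that is implemented
    first and ratified later."""
    description: str
    implemented: bool = False
    sign_offs: set = field(default_factory=set)
    facilities_layout_updated: bool = False  # Facilities has the new layout

    def sign(self, group: str) -> None:
        if group not in REQUIRED_GROUPS:
            raise ValueError(f"not a required group: {group}")
        self.sign_offs.add(group)

    def missing_sign_offs(self) -> set:
        return set(REQUIRED_GROUPS - self.sign_offs)

    def is_ratified(self) -> bool:
        # Ratified only once the change is in place, every group has
        # signed, and the official layout reflects it.
        return (self.implemented
                and not self.missing_sign_offs()
                and self.facilities_layout_updated)


if __name__ == "__main__":
    change = LayoutChange("Move the torque station next to the press")
    change.implemented = True          # moved on the basis of chalk marks
    change.sign("Safety")
    change.sign("Maintenance")
    print(sorted(change.missing_sign_offs()))  # ['Materials']
    print(change.is_ratified())                # False
```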

The more authority is given to the local process owners, the easier it is to implement improvements, but also the more responsibility upper managers assume for decisions they didn’t make. The appropriate level of delegation varies as Lean implementation progresses. It starts with a few, closely monitored pilot projects; as the organization matures and develops more skills, the number of improvement projects explodes, and the local managers develop the capability to conduct them autonomously. At any time, for the upper managers, it is a question of which decisions pass the “sleep-at-night” test: what changes can they empower subordinates to make on their own and still sleep at night?

Generating effective standards

If there is a proven method today to document manufacturing processes in such a way that they are actually executed as specified, it is Training Within Industry (TWI). The story of TWI is beginning to be well known. After proving effective in the US during World War II, it was abandoned along with many other wartime innovations in Manufacturing, but it lived on at Toyota for the following 50 years before Toyota alumni like John Shook revived it in the US.

There are, however, two limitations to TWI, as originally developed:

- It is based on World War II information technology, and it is difficult to imagine that the developers of TWI, if they were active today, would not use current information technology.

- It includes nothing about revision management. There is a TWI Problem-Solving Manual (1955), and solving a problem presumably leads to improving the process and producing a new version of job breakdown, instructions, etc. This in turn implies a process for approving and deploying the new version, archiving the old one and recording the date and product serial numbers of when the new version became effective.
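The record-keeping described in the last bullet can be sketched as an append-only history in which each revision carries its approval date and the first product serial number built to it. This is a hypothetical Python data structure, not something TWI defines; it shows why archiving old versions matters: you can still answer which instruction a given unit was built to.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Revision:
    label: str          # e.g. "A", "B"
    approved_on: date   # date the version was approved
    first_serial: int   # first product serial number built to this version


class InstructionHistory:
    """Hypothetical revision history for one work instruction. New versions
    are appended; superseded ones stay on file instead of being discarded."""

    def __init__(self) -> None:
        self._revisions: list[Revision] = []

    def release(self, rev: Revision) -> None:
        if self._revisions and rev.first_serial <= self._revisions[-1].first_serial:
            raise ValueError("effectivity serial numbers must increase")
        self._revisions.append(rev)

    def current(self) -> Revision:
        return self._revisions[-1]

    def in_effect_for(self, serial: int) -> Revision:
        """Return the revision that a unit with this serial number was built to."""
        firsts = [r.first_serial for r in self._revisions]
        i = bisect_right(firsts, serial) - 1
        if i < 0:
            raise LookupError(f"serial {serial} predates the first revision")
        return self._revisions[i]


if __name__ == "__main__":
    history = InstructionHistory()
    history.release(Revision("A", date(2012, 1, 9), 1000))
    history.release(Revision("B", date(2012, 6, 4), 1450))
    print(history.current().label)            # B
    print(history.in_effect_for(1200).label)  # A
```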

Revision management

The developers of TWI may simply have viewed revision management as a secondary, low-level clerical issue, and it may have been one in their day. Since then, however, the pace of engineering changes and new product introductions has picked up. In addition, in a Lean environment, changes in takt time every few months require you to regenerate Yamazumi and Work Combination charts, while Kaizen activity in full swing results in improvements to thousands of operations, each of which is revised at least every six months.
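To see why a change in takt time forces a new Yamazumi chart, here is a small Python sketch. Takt time is the net available working time divided by customer demand; the station names, operation times, shift length, and demand figures are made up for illustration.

```python
def takt_time(available_seconds: float, demand_units: int) -> float:
    """Takt time = net available working time / customer demand."""
    if demand_units <= 0:
        raise ValueError("demand must be positive")
    return available_seconds / demand_units


def yamazumi(stations: dict[str, list[float]], takt: float) -> dict[str, tuple[float, bool]]:
    """Stack the operation times at each station and flag any station whose
    total work content exceeds takt time."""
    return {name: (sum(ops), sum(ops) > takt) for name, ops in stations.items()}


# Hypothetical line: operation times in seconds per unit at each station.
STATIONS = {"S1": [20.0, 25.0], "S2": [30.0, 22.0], "S3": [15.0, 18.0, 12.0]}

if __name__ == "__main__":
    available = 7.5 * 3600  # one 7.5-hour shift, in seconds
    for demand in (450, 540):
        takt = takt_time(available, demand)
        over = [s for s, (_, late) in yamazumi(STATIONS, takt).items() if late]
        print(f"demand={demand} takt={takt:.0f}s over takt: {over}")
```

With 27,000 seconds available, raising demand from 450 to 540 units cuts takt time from 60 to 50 seconds, and station S2, with 52 seconds of work content, no longer fits: the work has to be rebalanced and the chart redrawn.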

In many manufacturing organizations, the management of product and process documentation is slow, cumbersome, and error-prone, particularly when done manually. Today, Product Data Management (PDM) is a segment of the software industry addressing these issues. It is technically possible to keep all the standards, with their revision history, in a database and retrieve them as needed. The growth of PDM has been driven not by demands from the shop floor but by external mandates like the ISO-900x standards; whatever the reasons may be, these capabilities are available today to any manufacturing organization that chooses to use them.

Using software makes the flow of change requests more visible, eliminates the handling delays and losses associated with paper documents, and allows multiple reviewers to work concurrently, but it does not solve the problem of the large number of changes that need to be reviewed, decided upon, and implemented.

Solving that problem is a matter of management policies, which should cover the following:

- Making each change proposal undergo the review process that it needs and no more than it needs.

- Filtering proposals as early as possible in the review process to minimize the number that go through the complete process to ultimately fail.

- Capping the number of projects in the review process at any time.

- Giving the review process sufficient priority and resources.
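Two of these policies, filtering early and capping the number of proposals under review, can be sketched as a small pull system in Python. The class name, the screening function, and the cap value are hypothetical; in practice they would be set by management policy.

```python
from collections import deque


class ReviewPipeline:
    """Hypothetical review process that screens proposals before full review
    and caps how many are under review at the same time."""

    def __init__(self, wip_cap: int, screen) -> None:
        self.wip_cap = wip_cap
        self.screen = screen        # cheap early check: proposal -> bool
        self.waiting = deque()      # passed screening, waiting for a slot
        self.in_review = []         # currently being reviewed
        self.rejected_early = []    # filtered out before full review

    def submit(self, proposal) -> None:
        if self.screen(proposal):
            self.waiting.append(proposal)
        else:
            self.rejected_early.append(proposal)
        self._pull()

    def finish(self, proposal) -> None:
        """Record that a review is complete, freeing its slot."""
        self.in_review.remove(proposal)
        self._pull()

    def _pull(self) -> None:
        # Start a new review only when the number in progress is below the cap.
        while self.waiting and len(self.in_review) < self.wip_cap:
            self.in_review.append(self.waiting.popleft())


if __name__ == "__main__":
    pipe = ReviewPipeline(wip_cap=2, screen=lambda p: p["in_scope"])
    for pid, in_scope in [(1, True), (2, False), (3, True), (4, True)]:
        pipe.submit({"id": pid, "in_scope": in_scope})
    print([p["id"] for p in pipe.in_review])  # [1, 3]
    print([p["id"] for p in pipe.waiting])    # [4]
```

The cap makes review a pull system: a new proposal enters review only when another one leaves. The other two policies, sizing each review to the change and giving the process priority and resources, are judgment calls that this sketch does not capture.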

Templates

In principle, revision management can be applied to any document. In practice, it helps if the documents have a common structure. If they cover the same topics, and the data about each topic is always in the same place, then each reviewer can immediately find the items of interest. This means using templates, but also walking the fine line to avoid turning into DeMarco’s template zombies.

If you ask a committee of future reviewers to design an A3 form for project charters, it will be a collection of questions they would like answered. Accountants, for example, would like to quantify the financial benefits of projects before they even start, and Quality Assurance would like to know what reduction in defect rates to expect… Shop floor teams can struggle for days trying to answer questions for which they have no data yet, or that are phrased with acronyms and abbreviations like IRR or DPMO that they don’t understand. More often than not, they end up filling out the forms with text that does not answer the questions.

The teams and project leaders should only be asked to answer questions that they realistically can, such as:

- The section of the organization that is the object of the project, and its boundaries.

- The motivation for the project.

- The current state and target state.

- A roster of the team, with the role of each member.

- A crude project plan with an estimate for completion date.

- A box score of performance indicators, focused on the parameters on the team performance board that are reviewed in daily meetings.
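One way to keep a charter form limited to the questions listed above is to make the template itself contain only those fields. Below is a hypothetical Python sketch; the field names mirror the list, the sample values are invented, and nothing here comes from a specific A3 standard.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ProjectCharter:
    """Hypothetical A3 charter template restricted to questions that a
    shop-floor team can realistically answer at the start of a project."""
    scope: str                    # section of the organization and its boundaries
    motivation: str
    current_state: str
    target_state: str
    team: dict[str, str]          # member name -> role on the project
    plan: list[tuple[str, date]]  # crude plan: (milestone, target date)
    box_score: dict[str, float]   # indicators from the team performance board

    def estimated_completion(self) -> Optional[date]:
        """Latest milestone date in the crude plan, or None if there is no plan."""
        return max((d for _, d in self.plan), default=None)

    def unanswered(self) -> list[str]:
        """Fields left empty, so the form can be returned before review."""
        return [name for name, value in vars(self).items() if not value]


if __name__ == "__main__":
    charter = ProjectCharter(
        scope="Final assembly line 2, from kitting to pack-out",
        motivation="",
        current_state="Frequent stops waiting for parts",
        target_state="Parts delivered to the line every hour",
        team={"Ana": "team leader", "Raj": "operator"},
        plan=[("Pilot", date(2012, 8, 1)), ("Rollout", date(2012, 9, 15))],
        box_score={"units per hour": 42.0},
    )
    print(charter.unanswered())            # ['motivation']
    print(charter.estimated_completion())  # 2012-09-15
```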

The same thinking applies to work instructions. It takes a special talent to design them and fill them out so that they are concise but sufficiently detailed where it matters, and understood by the human beings whose activities they are supposed to direct.

Display

It is also possible to display all instructions on the shop floor in electronic form. The key questions are whether it actually does the job better and whether it is cheaper. In the auto parts industry, instructions are usually posted in hardcopy; in computer assembly, they are displayed on screens. One might think that the computer industry is doing it to use what it sells, but there is a more compelling reason: while the auto parts industry will make the same product for four years or more, 90% of what the computer industry assembles is for products introduced within the past 12 months. While the auto parts industry may not be able to justify the cost of placing monitors over each assembly station, what computer assemblers cannot afford is the cost and error rate of having people constantly post new hardcopy instructions.

In the auto industry, Toyota has created a Global Production Center, which uses video and animation to provide quick and consistent initial training and to support new product introduction in its worldwide, multilingual plants. To this day, however, I do not believe that Toyota uses screens to post work instructions on the shop floor. In the assembly of downhole measurement instruments for oilfield services, Schlumberger in Rosharon, TX, is pioneering the use of iPads to display work instructions.

Jul 19 2012

ISO 9001: Conspicuous by Its Absence | Quality Digest


To paraphrase the judge in My Cousin Vinny, this is a lucid, intelligent, well-thought-out argument for the usefulness of ISO 9001: you don’t hear about its benefits because they come in the form of problem prevention.

Levinson is persuasive, but I can’t help thinking that we should still be able to see before-and-after quality metrics for companies that implement ISO 9001. The results don’t have to be immediate, but they have to exist.



By Michel Baudin • Press clippings 3 • Tags: ISO, Quality