Oct 12 2022

Musings on Large Numbers

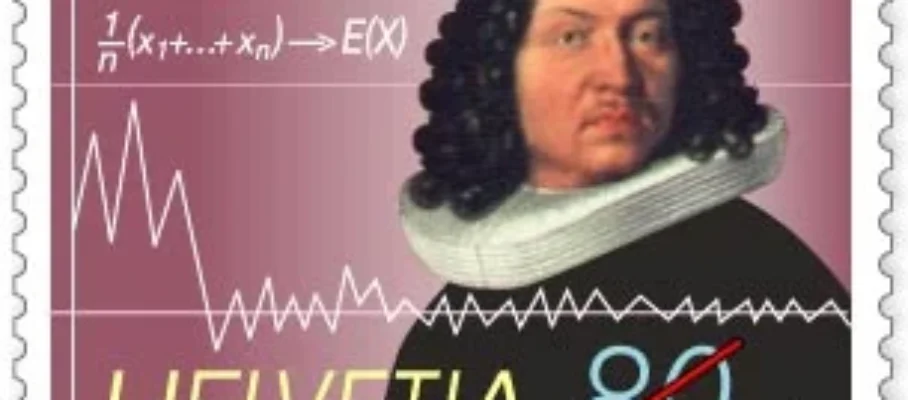

Anyone who has taken an introductory course in probability, or even in statistical process control (SPC), has heard of the law of large numbers. It’s a powerful result from probability theory and perhaps the most widely used one. Wikipedia opens its article on the topic with a statement free of any caveats or restrictions:

In probability theory, the law of large numbers is a theorem that describes the result of performing the same experiment a large number of times. According to the law, the average of the results obtained from a large number of trials should be close to the expected value and tends to become closer to the expected value as more trials are performed.

This is how the literature describes it and how most professionals understand it. Buried in the fine print of the Wikipedia article, however, are the conditions under which the law applies. First, we discuss the difference between sample averages and expected values, both of which we often call “mean.” Then we consider applications of the law of large numbers in cases ranging from SPC to statistical physics. Finally, we zoom in on a simple case, the Cauchy distribution. It emerges easily from experimental data, and the law of large numbers does not apply to it.
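The contrast between a distribution that obeys the law and one that does not can be sketched with a small simulation. The following is only an illustration under stated assumptions, not the analysis from the full post: the helper names `running_means` and `standard_cauchy`, the seed, and the sample size are all choices made here. It uses only Python's standard library and draws Cauchy values by inverting the cumulative distribution function.

```python
import math
import random


def running_means(samples):
    """Return the cumulative averages x1, (x1+x2)/2, ... of the samples."""
    means, total = [], 0.0
    for n, x in enumerate(samples, start=1):
        total += x
        means.append(total / n)
    return means


def standard_cauchy(rng):
    """Draw one standard Cauchy value by inverting its CDF."""
    return math.tan(math.pi * (rng.random() - 0.5))


rng = random.Random(2022)  # arbitrary seed, used only for repeatability
n = 100_000                # arbitrary sample size (an assumption)

gauss_means = running_means(rng.gauss(0.0, 1.0) for _ in range(n))
cauchy_means = running_means(standard_cauchy(rng) for _ in range(n))

# Print the running averages at a few checkpoints for comparison.
for k in (100, 1_000, 10_000, 100_000):
    print(f"n={k:>7}  Gaussian: {gauss_means[k - 1]:+.4f}"
          f"  Cauchy: {cauchy_means[k - 1]:+.4f}")
```

The Gaussian running average should settle near its expected value of 0 as the sample grows. The Cauchy distribution has no expected value, and the average of n standard Cauchy draws is itself standard Cauchy, so its running average keeps jumping no matter how large n gets.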

Jan 12 2024

Gaussian (Normal) Distributions In Science

The Gaussian – also known as “Normal” – distribution is used and abused in many domains. In manufacturing, this includes quality assurance, supply-chain management, and human resources. This is the first in a series of posts aimed at understanding the range of applicability of this tool.

Googling uses of the normal distribution produces nearly 1 million results. Yet the top ones all ignore science, even when you narrow the query to physics. This post attempts to remedy that. For example, the Gaussian distribution plays a central role in modeling Brownian motion, diffusion processes, heat transfer by conduction, the measurement of star positions, and the theory of gases.

These matter not just because the models are useful but also because they anchor this abstraction in physical phenomena that we can experience with no more equipment than a middle school science project uses. This post will not help you solve a shop-floor problem by the end of the day, but I hope you will find it enlightening nonetheless.
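As a rough illustration of how a Gaussian shape arises in Brownian motion and diffusion, here is a minimal sketch of a one-dimensional random walk. It is not taken from the full post: the function name `final_position`, the step model (independent steps of +1 or -1), the seed, and the walker counts are all assumptions made for this example.

```python
import math
import random


def final_position(n_steps, rng):
    """Sum n_steps independent steps, each +1 or -1 with equal probability."""
    return sum(rng.choice((-1, 1)) for _ in range(n_steps))


rng = random.Random(2024)        # arbitrary seed, used only for repeatability
n_steps, n_walkers = 400, 2_000  # arbitrary sizes (assumptions)

positions = [final_position(n_steps, rng) for _ in range(n_walkers)]

mean = sum(positions) / n_walkers
variance = sum((x - mean) ** 2 for x in positions) / (n_walkers - 1)

# For a Gaussian, about 68% of values lie within one standard deviation.
sigma = math.sqrt(n_steps)
within_one_sigma = sum(abs(x) <= sigma for x in positions) / n_walkers

print(f"mean={mean:+.2f}  variance={variance:.1f} (theory: {n_steps})")
print(f"fraction within one sigma: {within_one_sigma:.3f}")
```

Each walker's final position has mean 0 and variance equal to the number of steps. The fraction within one standard deviation should land in the neighborhood of the Gaussian value of about 68%, somewhat higher here because the positions are discrete.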

By Michel Baudin • Laws of nature • 4 • Tags: Brownian motion, Diffusion, gaussian, Heat transfer, Normal distribution, Theory of Gases