Apr 15 2012

Metrics in Lean – Part 3 – Equipment

The aggregate metric for equipment most often mentioned in the Lean literature is Overall Equipment Effectiveness (OEE). I first encountered it 15 years ago, when a client's intern, who had been slated to help on a project I was coaching, was instead commandeered to spend the summer calculating the OEEs of machine tools. I argued that the project was a better opportunity than just taking measurements, both for the improvements at stake for the client and for the intern's learning experience, but I lost. Looking more closely at the OEE itself, I found it difficult to calculate accurately, to analyze, and to act on. In other words, it does not meet the requirements listed in Part 1.
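For readers who have not met it, OEE is usually presented as the product of three ratios: availability × performance × quality. The sketch below assumes that textbook decomposition and uses made-up numbers for one shift; the class and field names are hypothetical. It also shows how the result moves with a single judgment call, the ideal cycle time.

```python
from dataclasses import dataclass


@dataclass
class ShiftRecord:
    """Hypothetical record of one machine over one shift (times in minutes)."""
    planned_minutes: float      # time the machine was scheduled to run
    downtime_minutes: float     # unplanned stops within the planned time
    ideal_cycle_minutes: float  # assumed best-possible time per part
    total_count: int            # parts produced, good or bad
    good_count: int             # parts that passed inspection


def oee(r: ShiftRecord) -> dict[str, float]:
    """Textbook OEE = availability x performance x quality."""
    run_minutes = r.planned_minutes - r.downtime_minutes
    availability = run_minutes / r.planned_minutes
    performance = r.ideal_cycle_minutes * r.total_count / run_minutes
    quality = r.good_count / r.total_count
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


if __name__ == "__main__":
    shift = ShiftRecord(480, 60, 1.0, 350, 336)
    print(f"OEE = {oee(shift)['oee']:.2f}")
    # Same shift, same output, but assuming a 0.9-minute ideal cycle time.
    faster = ShiftRecord(480, 60, 0.9, 350, 336)
    print(f"OEE = {oee(faster)['oee']:.2f}")
```

The product simplifies to ideal cycle time × good count / planned time, so the availability and performance factors partly cancel. In this example, assuming a 10% shorter ideal cycle time lowers the OEE from 0.70 to 0.63 without anything changing on the shop floor.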

Jun 17 2012

Data, information, knowledge, and Lean

Terms like data and information are often used interchangeably. Value Stream Mapping (VSM), for example, is also called Materials and Information Flow Analysis (MIFA) and, in this context, there is no difference between information and data. Why then should we bother with two terms? Because “retrieving information from data” is meaningless unless we distinguish the two.

The term knowledge is used when calling a document a "body of knowledge" or an online resource a "knowledge base," even when their contents might be more aptly described as dogmas or beliefs, sometimes with only a tenuous relationship to reality. Computer scientists are fond of calling knowledge anything that takes the form of rules or recommendations. Having an assertion in a "knowledge base," however, does not make it knowledge in any sense the rest of the world would recognize. If it did, astrology would qualify as knowledge.

In Lean, as in many other fields, clarity enriches communication and, in this case, it can be achieved easily, in a way that is useful both technically and in everyday language. In a nutshell:

Authors like Chaim Zins have written theories about data, information, and knowledge that are much more complicated than, and I don't believe more enlightening than, the simple points that follow. They also go one step further and discuss how to extract wisdom from knowledge, but I won't follow them there. The search for wisdom is usually called philosophy, and it is too theoretical a topic for this blog.

Data

In his landmark book on computer programming, Don Knuth defined data as “the stuff that’s input or output.” While appropriate in context, this definition needs refinement to include data that is not necessarily used in a computer, such as the Wright Brothers’ lift measurements (See Figure 1). If we just say data is the stuff that’s read or written, this small change does the trick. It can be read by a human or a machine. It can be written on paper by hand or by a printer, it can be displayed on a computer screen, it can be a colored lamp that turns on, or even a siren sound.

Figure 1. Data example: the Wright Brothers’ lift measurements

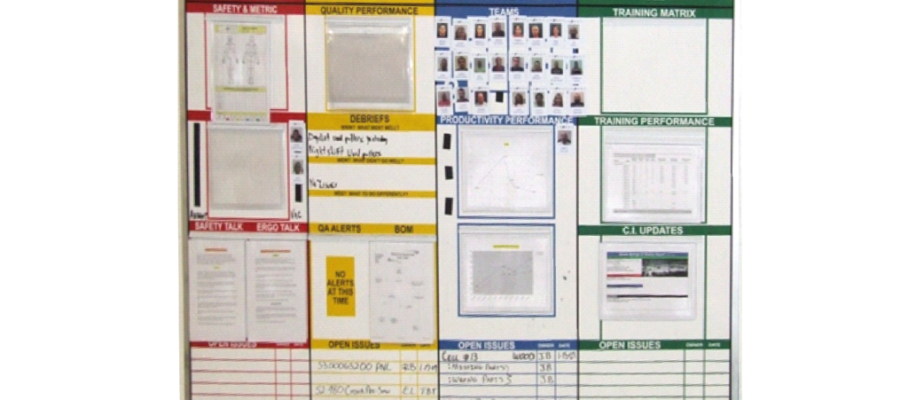

More generally, all our efforts to make a plant visible are aimed at making it easier to read and, although it is not often presented this way, 5S is really about data acquisition. Conversely, team performance boards, kanbans, andons, or real-time production monitors are all ways to write data for people, while any means used to pass instructions to machines can be viewed as writing data, whether it is done manually or by control systems.

What is noteworthy about reading and writing is that both involve replication rather than consumption. Flow diagrams for materials and data can look similar but, once you have used a screw to fasten two parts, you no longer have that screw, and you need to keep track of how many you have left. On the other hand, the instructions you read on how to fasten these parts are still there once you have read them: they have been replicated in your memory. Likewise, writing data does not make you forget it. This fundamental difference between materials and data needs to be kept in mind when generating or reviewing, for example, Value Stream Maps.
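The difference can be sketched in a few lines of Python. The class names and quantities are hypothetical; the only point is that using a part decrements a count, while reading an instruction copies it and leaves the original intact.

```python
class Bin:
    """A bin of parts: using a part removes it from the bin."""

    def __init__(self, part: str, quantity: int) -> None:
        self.part = part
        self.quantity = quantity

    def consume(self, n: int = 1) -> None:
        if n > self.quantity:
            raise ValueError(f"only {self.quantity} {self.part}(s) left")
        self.quantity -= n  # consumption: the parts are gone


class Reader:
    """A person or machine that reads data."""

    def __init__(self) -> None:
        self.memory: list[str] = []

    def read(self, document: list[str]) -> None:
        self.memory.extend(document)  # replication: the document is unchanged


if __name__ == "__main__":
    screws = Bin("screw", 100)
    instruction = ["Insert screw", "Torque to spec"]
    operators = [Reader(), Reader()]
    for op in operators:
        screws.consume(2)
        op.read(instruction)
    print(screws.quantity, instruction, operators[1].memory)
```

On a Value Stream Map, the bin needs a count that goes down with use; the instruction does not, no matter how many people read it.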

Information

Information is a more subtle quantity. If you don't know who won the 2010 Soccer World Cup and read a news headline that tells you Spain did, you would agree that reading it gave you some information. On the other hand, if you already knew it, the headline would not inform you, and reading it a second time would not inform you either. In other words, information is not a quantity that you can attach to the data alone, but to a reading of the data by an agent.

If you think of it as a quantity, it has the following characteristics:

The above discussion embodies our intuitive, everyday notion of what information is. For most of our purposes — like designing a performance board for Lean daily management, an andon system, a dashboard on a manager’s screen, or a report — this qualitative discussion of information is sufficient. We need to make sure they provide content the reader does not already know, and make the world in which he or she operates less uncertain. In other words, reading the data we provide should allow readers to make decisions that are safer bets about the future.

In the mid-20th century, however, the mathematician Claude Shannon took it a step further: he formalized this principle into a quantitative definition of information, proved that, up to the choice of a unit, only one mathematical function could be used to measure it, and introduced the bit as its unit of measure. Let us assume that you read a headline that says "Spain defeats the Netherlands in the Soccer World Cup Final." If you already knew that the finalists were Spain and the Netherlands and thought they were evenly matched, then the headline gives you one bit of information. If you had no idea which of the 32 teams that entered the tournament would be finalists and, to you, all pairs of teams had equal chances, then, by telling you it was Spain and the Netherlands, the headline gives you an additional 8.95 bits (there are 496 possible pairs, and log₂ 496 ≈ 8.95).
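Shannon's measure of the information a message carries for a particular reader is the base-2 logarithm of the inverse of the probability that reader assigned to it beforehand. The sketch below, with a function name of my own choosing, reproduces the numbers in the headline example. The reader's probabilities are the assumptions stated in the text; in particular, treating all pairs of finalists as equally likely ignores the structure of the tournament bracket.

```python
import math


def self_information(probability: float) -> float:
    """Bits conveyed to a reader who gave the message this prior probability."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    # A message the reader was already certain of carries no information.
    return 0.0 if probability == 1.0 else -math.log2(probability)


if __name__ == "__main__":
    # Reader who already knew the result.
    print(self_information(1.0))
    # Reader who knew the finalists and thought them evenly matched.
    print(self_information(1 / 2))
    # Reader with no idea of the finalists: all pairs of 32 teams equally likely.
    pairs = math.comb(32, 2)
    print(pairs, round(self_information(1 / pairs), 2))
```

Because the result depends on the reader's prior probability rather than on the text of the headline, the same data carries 0 bits, 1 bit, or about 9.95 bits (8.95 for the finalists plus 1 for the winner) depending on who reads it.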

Over the decades, his theory has found applications ranging from the design of communication networks to counting cards in blackjack, but it has done less to help manufacturers understand factory data. It does have a use, however, in assigning an economic value to the acquisition of information, and thereby justifying the needed investment.

Knowledge

On November 3, 1948, the readers of the Chicago Tribune received information in the "Dewey defeats Truman" headline (See Figure 2). None of them, however, would describe this information as knowledge, for the simple reason that it was not true. This should not need to be said and, outside the software industry, it doesn't. Software marketers, however, have muddied the waters by calling rules derived from such assertions "knowledge," regardless of any connection with reality. By doing so, they have erased the vital distinction between belief, superstition, or delusion on one side and knowledge on the other.

Figure 2. When information is not knowledge

As Mortimer Adler put it in Ten Philosophical Mistakes (pp. 83-84), “it is generally understood that those who have knowledge of anything are in possession of the truth about it. […] The phrase ‘false knowledge’ is a contradiction in terms; ‘true knowledge’ is manifestly redundant.”

When "knowledge bases" first appeared in the 1980s, they contained rules to arrive at a decision, and they only worked well with rules that were true by definition. For example, insurance companies have procedures to set premiums, which translate well into "if-then" rules. A software system applying these rules could then be faster and more accurate than a human underwriter retrieving them from a thick binder.
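A minimal sketch of what such a system does, assuming premiums are set by a base rate and a few multiplicative surcharges. The rules, field names, and numbers are hypothetical, not any insurer's actual procedure. Because the procedure defines what the premium is, the program is right exactly when it transcribes the binder correctly.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Applicant:
    age: int
    commercial_use: bool
    claims_last_5_years: int


@dataclass
class Rule:
    name: str
    applies: Callable[[Applicant], bool]  # the "if" part
    multiplier: float                     # the "then" part


# Hypothetical underwriting rules, for illustration only.
RULES = (
    Rule("driver under 25", lambda a: a.age < 25, 1.5),
    Rule("commercial use", lambda a: a.commercial_use, 1.2),
    Rule("two or more recent claims", lambda a: a.claims_last_5_years >= 2, 1.4),
)


def premium(applicant: Applicant, base: float = 1000.0,
            rules: Sequence[Rule] = RULES) -> tuple[float, list[str]]:
    """Apply every rule whose condition holds; return the premium and the rules fired."""
    amount = base
    fired: list[str] = []
    for rule in rules:
        if rule.applies(applicant):
            amount *= rule.multiplier
            fired.append(rule.name)
    return amount, fired


if __name__ == "__main__":
    print(premium(Applicant(age=22, commercial_use=False, claims_last_5_years=3)))
```

Returning the names of the rules that fired lets a reviewer trace each premium back to the procedure it came from, which is what makes checking such a system against the binder straightforward.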

On the other hand, in machine failure diagnosis, rules are true only to the extent that they actually work with the machine; this is substantially more complex and error-prone than applying procedures, and the rule-based knowledge systems of the 1980s were not successful in this area. Nowadays, a "knowledge base" is more often a forum where users of a particular software product post solutions to problems. While these forums are useful, there is no guarantee that their content is, in any way, knowledge.

The books on data mining are all about algorithms, and assume the availability of accurate data. In real situations, and particularly in manufacturing, algorithms are much less of a problem than data quality. There is no algorithm sophisticated enough to make wrong data tell a true story. The key point here is that, if we want to acquire knowledge from the data we collect, the starting point is to make sure it is accurate. Then we can all be credible Hulks (See Figure 3).
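What "making sure it is accurate" means in practice depends on the plant, but a first pass can be automated. Below is a minimal sketch, assuming a hypothetical log of production runs with a machine ID, start and end times, and a part count; it flags records that cannot be true. Checks like these only catch internal contradictions: a value that is plausible but wrong still has to be caught by comparing the data with what actually happened on the shop floor.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Run:
    """Hypothetical record of one production run on one machine."""
    machine_id: str
    start: datetime
    end: datetime
    parts_made: int


def find_problems(runs: list[Run], known_machines: set[str]) -> list[tuple[int, str]]:
    """Return (record index, problem) pairs for records that cannot be true."""
    problems: list[tuple[int, str]] = []
    for i, r in enumerate(runs):
        if r.machine_id not in known_machines:
            problems.append((i, "unknown machine"))
        if r.end <= r.start:
            problems.append((i, "end not after start"))
        if r.parts_made < 0:
            problems.append((i, "negative part count"))

    # A machine cannot run two jobs at once. Sort each machine's runs by start
    # time and compare each run with the latest end time seen so far.
    by_machine: dict[str, list[tuple[datetime, datetime, int]]] = {}
    for i, r in enumerate(runs):
        by_machine.setdefault(r.machine_id, []).append((r.start, r.end, i))
    for intervals in by_machine.values():
        intervals.sort()
        latest_end, latest_i = None, None
        for start, end, i in intervals:
            if latest_end is not None and start < latest_end:
                problems.append((i, f"overlaps record {latest_i}"))
            if latest_end is None or end > latest_end:
                latest_end, latest_i = end, i
    return problems
```

An empty result does not prove the data is accurate; it only removes the records that no algorithm should be fed.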

Figure 3. The Credible Hulk (received from Arun Rao, source unknown)


By Michel Baudin • Technology • Tags: 5S, Information systems, Information technology, IT, KPI, Visual management