Oct 14 2011

Revisiting Pareto

Revisiting Pareto is a paper about the application of the 80/20 law, also known as the law of the vital few and the trivial many, or the Pareto principle. It presents new ways of applying it to manufacturing operations and is scheduled for publication in Industrial Engineer Magazine in January 2012. As a preview, I am including here the following:

- The complete abstract of the paper as it will be published.

- A section on a simulation of a game leading to the emergence of a Pareto distribution of wealth among players, which was cut from the article because it is meant to stimulate thinking and does not contain actionable recommendations.

Abstract of the paper as scheduled for publication in 1/2012

The Pareto principle, so named by J.M. Juran in the 1940s, is also known as the 80/20 law, the law of the vital few and the trivial many, and ABC classification. It is a simple yet powerful idea, generally accepted but still underutilized in business. First recognized by Vilfredo Pareto in the distribution of farm land in Italy around 1900, it applies in various forms to city populations as counted in the 2010 census of the US, as well as to quality defects in manufacturing, the market demand for products, the consumption of components in production, and various metrics of item quantities in warehouses.

The key issues in making it useful are (1) to properly define quantities and categories, (2) to collect or retrieve the data needed, (3) to identify the actions to take as a result of its application and (4) to present the analysis and recommendations in a compelling way to decision makers.

In the classical Pareto analysis of quality problems, defect categories only partition the defectives if no unit has more than one type of defect. When multiple defects can be found on the same unit, eliminating the tallest bar may increase the height of its runner-up. With independent defect categories and an electronic spreadsheet, this is avoided by calculating the yield of every test in the sequence instead of simply counting occurrences, and multiplying these yields to obtain the yield of a sequence of tests. We can then plot a bar chart of the relative frequency of each defect in the population actually tested for it and, as a cumulative chart, the probability of observing at least one of the preceding defects.
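A minimal sketch of the yield-based calculation, assuming independent defect categories and tests applied in sequence, with failing units pulled so that each test only sees the units that passed the earlier ones. The defect names and counts are made up for illustration, and the function name `pareto_by_yield` is hypothetical.

```python
from itertools import accumulate
from operator import mul

# Hypothetical results, in the order the tests are applied:
# (defect, units tested, units failing). Failing units are assumed
# to be pulled, so each test only sees units that passed earlier ones.
tests = [
    ("Scratch", 10_000, 500),
    ("Missing screw", 9_500, 190),
    ("Leak", 9_310, 931),
]


def pareto_by_yield(tests):
    """Return (defect, defect rate, P(at least one defect so far)) rows,
    tallest bar first, assuming independent defect categories."""
    # Rate of each defect within the population actually tested for it
    rates = [(name, failed / tested) for name, tested, failed in tests]
    # Tallest bar first, as in a classical Pareto chart
    rates.sort(key=lambda row: row[1], reverse=True)
    # Under independence, the yield of a sequence of tests is the product
    # of their yields; 1 minus that product gives the cumulative curve
    yields = accumulate((1 - rate for _, rate in rates), mul)
    return [(name, rate, 1 - y) for (name, rate), y in zip(rates, yields)]


for name, rate, cumulative in pareto_by_yield(tests):
    print(f"{name:15s} {rate:6.1%} {cumulative:6.1%}")
```

With these made-up numbers, the cumulative column ends at 16.2%, which is also the share of the 10,000 units that failed one of the three tests (1,621 of them).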

When analyzing warehouse content, there are usually too many different items to count physically, and we must rely on data in the plant’s databases. The working capital tied up in each item is usually the most accessible quantity. But physical characteristics like volume occupied, weight or part count are often scattered in multiple databases and sometimes not recorded. The challenge then is to extract, clean, and integrate the relevant data from these different sources.

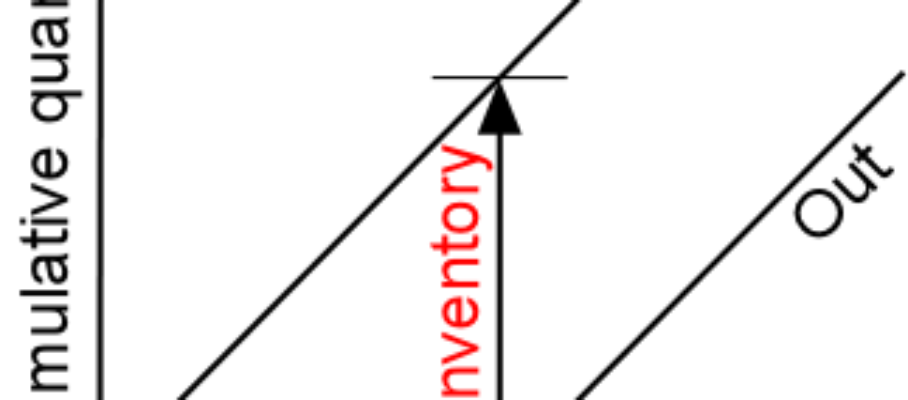

In both supply chain and in-plant logistics, the design of the system should start with an analysis of part consumption by a production line in terms of frequency of use, another quantity that is not additive across items. In this application, the classical Pareto chart is replaced by the S-curve, which plots the number of shippable product units as a function of the frequency ranks of the components used, and thus provides a business basis for grouping them into A-, B- and C-items, also known as runners, repeaters and strangers.
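One way such a curve could be computed is sketched below, under assumptions of my own rather than details from the paper: a component’s frequency of use is counted as the number of product units that consume it, and a product unit counts as shippable at rank k when every component in its bill of materials is among the k most frequently used. The function `s_curve` and the example data are hypothetical.

```python
from collections import Counter


def s_curve(bills, demand):
    """Return (ranked components, shippable units at each rank).

    bills:  product -> set of components in its bill of materials
    demand: product -> number of units to ship
    Assumption: a component's frequency of use is the number of product
    units that consume it, and point k of the curve counts the units whose
    components are all among the k most frequently used.
    """
    frequency = Counter()
    for product, components in bills.items():
        for component in components:
            frequency[component] += demand[product]
    # Most used first; ties broken by name so the ranking is repeatable
    ranked = sorted(frequency, key=lambda c: (-frequency[c], c))
    curve = []
    for k in range(1, len(ranked) + 1):
        top_k = set(ranked[:k])
        curve.append(sum(demand[p] for p, comps in bills.items()
                         if set(comps) <= top_k))
    return ranked, curve


# Hypothetical example: three products built from four components
bills = {"P1": {"a", "b"}, "P2": {"a", "c"}, "P3": {"a", "b", "d"}}
demand = {"P1": 50, "P2": 30, "P3": 5}
ranked, curve = s_curve(bills, demand)
```

Here `ranked` is `['a', 'b', 'c', 'd']` and `curve` is `[0, 50, 80, 85]`; the cut-offs between A-, B- and C-items would then be chosen from the shape of this curve.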

The presentation of the results should be focused on communication, not decoration, and use graphics only to the extent that they serve this purpose.

Emergence of the Pareto distribution in a simulation

While the Pareto principle can be easily validated in many contexts, we are short on explanations as to why that is. We do not provide one, but we show how, in a simulation of a multiple-round roulette game in which each player’s winnings at each round determine his odds in the next one, the distribution of chips among the players eventually comes close to the 80/20 rule. This happens even though it is a pure game of chance: players start out with equal numbers of chips and have no way to influence the outcome.

Assume we use a roulette wheel to allocate chips among players in multiple rounds, with the following rules:

- On the first round, the players all have equal wedges of the roulette wheel, and the roulette is spun once for each chip.

- On each following round, each player’s wedge is proportional to the amount he won on the preceding round.
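The rules above can be sketched as a short simulation. This is a minimal, scaled-down sketch using only the Python standard library, not the software behind the results reported in this post; the function name `play`, its parameters and their default sizes are assumptions, chosen far below the full-scale run so that a pure-Python loop finishes quickly.

```python
import random
from collections import Counter


def play(n_players=100, n_chips=10_000, n_rounds=200, seed=None):
    """Scaled-down sketch of the multiple-round roulette game.

    Hypothetical helper: sizes are far smaller than in the article.
    Assumes n_rounds >= 1. Returns the number of chips each player
    holds after the last round.
    """
    rng = random.Random(seed)
    players = range(n_players)
    # Round 1: every player has an equal wedge of the wheel
    weights = [1] * n_players
    for _ in range(n_rounds):
        # Spin the wheel once per chip; each wedge's chance is its weight
        spins = rng.choices(players, weights=weights, k=n_chips)
        won = Counter(spins)
        # Next round's wedges are proportional to this round's winnings;
        # a player who won nothing has a zero wedge and is out for good
        weights = [won[p] for p in players]
    return weights
```

Calling `sorted(play(seed=1), reverse=True)` lists the players from richest to poorest. Because the wheel is spun once per chip and nothing else moves chips, the total always equals `n_chips`, which makes the zero-sum property easy to check.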

This is a pure game of chance. There is nothing players can do to influence the outcome, and the players who do best in one round are set to do even better on the next one. It is also a zero-sum game, in that whatever a player wins comes out of the other players’ hands. There is no safety net: a player with no chips after any round is out of the game. If you know the present state of the game (how many chips the player in each rank has), then the results of future rounds are independent of the path by which you arrived at it. Technically, it is a random walk and a Markov process. The concept of the game is shown in Figure 1.

It could be viewed as representing a simplified Oklahoma Land Rush, where farmers of equal talent are initially given identical plots of land. Even in the first round, some plots will have higher crop yields than others. If the average crop is needed to survive, the farmers with a surplus sell it to the farmers with a shortfall in exchange for land and start the next season with land in proportion to the crop they had on the previous one. The players could also be functionally equivalent, similarly priced consumable products sold in the same channels, like toothpaste or soft drinks, and the chips would be the buyers of these products.

If we run this game through enough rounds, do we tend towards a distribution where 20% of the players hold 80% of the chips, or do all the chips end up in the hands of only one player? We simulated this game in software with 1 million chips to be divided among 1,000 players in 100,000 rounds, and the simulation takes about 2 minutes to run on a desktop PC.

The results show that the “wealth distribution” is stable beyond 10,000 rounds, with 23% of the players collectively holding 80% of the chips. Figure 2 shows the distribution after 100,000 rounds, which roughly satisfies the 80/20 law, but not recursively: it takes the top 49% of the players to account for 96% of the chips, instead of the 36% predicted by applying the 80/20 rule again to the bottom 80% of the players.
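The two figures compared here, the fraction of top players needed to hold a given share of the chips and the recursive 80/20 prediction, can be computed as follows. A minimal sketch; the function names are hypothetical, and "recursive" is taken to mean applying the top-20%-hold-80% split again to the players left over, which is the reading that yields 36% of players holding 96% of the chips.

```python
def top_player_share(holdings, chip_share=0.8):
    """Smallest fraction of players, richest first, whose combined
    holdings reach at least `chip_share` of all chips."""
    ranked = sorted(holdings, reverse=True)
    target = chip_share * sum(ranked)
    running = 0
    for count, chips in enumerate(ranked, start=1):
        running += chips
        if running >= target:
            return count / len(ranked)
    return 1.0


def recursive_pareto(levels, top=0.2, share=0.8):
    """Fractions of players and chips covered when the top-20%-hold-80%
    split is applied `levels` times, each time to the remaining players."""
    players = chips = 0.0
    rest_players = rest_chips = 1.0
    for _ in range(levels):
        players += top * rest_players
        chips += share * rest_chips
        rest_players *= 1 - top
        rest_chips *= 1 - share
    return players, chips
```

`recursive_pareto(2)` returns approximately (0.36, 0.96), the 36% of players and 96% of chips of a recursive 80/20 split; `top_player_share(holdings, 0.96)` gives the corresponding fraction for simulated holdings.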

Figure 2. Chip distribution after 100,000 rounds


Figure 3 shows other indicators of the simulation results every 10,000 rounds. The proportion of top players holding 80% of all the chips oscillates between 21.5% and 25%, with a very slow downward trend. The number of chips held by the top 10 players also varies, but is essentially flat.

Figure 3. Indicators every 10,000 rounds

This pure game of chance, which favors at every round the winners of the previous round, does not rapidly concentrate all the chips in a few hands. Instead, it produces a distribution that is as close to Pareto’s as anything we can observe in demographics, quality, or production control. This suggests the following two conclusions:

- The reason the 80/20 law is so often verified with actual data is that it does not require some categories to be “better” than others. A long random walk is sufficient to produce it, even when you start out with equal quantities assigned to each category. The products that are Runners are not necessarily better in any way than the Repeaters or the Strangers. From the point of view of Manufacturing, it does not matter why or how they became Runners: as long as they dominate the volume, they need dedicated resources.

- If some categories are intrinsically better able to attract the quantity to be distributed than others, we can expect the distribution to be more unequal than 80/20. If the players are farmers, differences in skill may skew the distribution further. If they are products competing for customers, the better products may take over the market, but it does not always happen.

The simulation has shown the limitations of the conclusions drawn from a Pareto chart. When a product is a Runner, by definition it deserves a dedicated production line. That is a direct consequence of its being at the top of the chart. It does not, however, imply that it is “better” in any way than the other products. The simulation arrived at a near 80/20 distribution of the chips without assuming any difference in the players’ ability to acquire chips: the roulette wheel has no favorites, and the players can do nothing to influence the outcome.

Oct 19 2011

The Role of Technology in Continuous Improvement

In an article on this topic in Industry Week today, Ralph Keller asserts that Continuous Improvement is focused on business processes rather than technology.

However, if you wrap tinfoil around the feet of a welding fixture to make it easier to clean, replace bolts with clamps on a machine to reduce setup time, or mount a hand tool on the machine on which it is used, it usually counts as Continuous Improvement but involves technical changes to work that I don’t think anyone would describe as business processes.

Yes, Continuous Improvement is done without expensive technology, but it does involve cheap technology.

Ralph Keller also reminds us that Continuous Improvement is not “rocket science,” which implies that it is easier. I agree that it is different, but not easier. I don’t know any rocket scientist with the skills to facilitate Continuous Improvement.


By Michel Baudin • Management • Tags: Continuous improvement, industrial engineering, Kaizen, Lean manufacturing, Manufacturing engineering