Oct 27 2023

Is SPC Still Relevant? | D.C. Fair & S. Hindle | Quality Digest

“Today’s manufacturing systems have become more automated, data-driven, and sophisticated than ever before. Visit any modern shop floor and you’ll find a plethora of IT systems, HMIs, PLC data streams, machine controllers, engineering support, and other digital initiatives, all vying to improve manufacturing quality and efficiencies.

That begs these questions: With all this technology, is statistical process control (SPC) still relevant? Is SPC even needed anymore? Some believe manufacturing sophistication trumps SPC technologies that were invented 100 years ago. But is that true? We the authors believe that SPC is indeed relevant today and can be a vitally important aid to manufacturing.”

Source: Quality Digest

Mar 5 2024

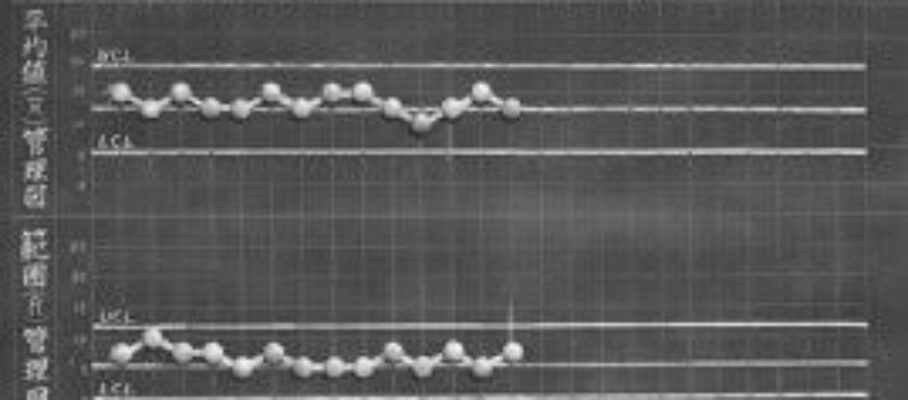

Process Control and Gaussians

The statistical quality profession has a love/hate relationship with the Gaussian distribution. In SPC, the profession treats it like an embarrassing spouse: it uses the Gaussian distribution as the basis for all its control limits while claiming that it doesn't matter. In 2024, what role, if any, should this distribution play in setting action limits for quality characteristics?
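To make the Gaussian dependence concrete, here is a minimal sketch, assuming an individuals (XmR) chart as the example. This is one common chart type chosen for illustration, not necessarily the one the full article discusses. Two places rely on the Gaussian. The 3-sigma limits are justified by the small two-sided tail probability of a normal variable beyond ±3σ. The sigma estimate divides the average moving range by d2 = 1.128, a constant that is itself the expected range of two independent Gaussian observations in sigma units. The function names and the data values are hypothetical.

```python
import math
from statistics import mean


def gaussian_tail_prob(k: float) -> float:
    """Two-sided probability that a Gaussian variable lies more than k sigmas from its mean."""
    return math.erfc(k / math.sqrt(2))


def xmr_limits(values: list[float]) -> tuple[float, float, float]:
    """Return (LCL, CL, UCL) for an individuals (XmR) chart.

    Sigma is estimated as the average moving range divided by d2 = 1.128,
    the bias-correction constant for ranges of 2 consecutive points,
    which is derived under a Gaussian assumption.
    """
    if len(values) < 2:
        raise ValueError("need at least two values")
    center = mean(values)
    # Absolute differences between consecutive observations
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma_hat = mean(moving_ranges) / 1.128
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


# Hypothetical measurements, for illustration only
data = [10.2, 9.8, 10.1, 10.4, 9.9, 10.0, 10.3, 9.7, 10.1, 10.2]
lcl, cl, ucl = xmr_limits(data)
print(f"LCL={lcl:.3f}  CL={cl:.3f}  UCL={ucl:.3f}")
print(f"False-alarm rate per point if Gaussian: {gaussian_tail_prob(3):.4%}")
```

If the process is not Gaussian, neither the roughly 0.27% false-alarm rate nor the 1.128 constant holds exactly, which is the tension the post raises.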



By Michel Baudin • Data science, Technology • Tags: Control Charts, Control Limits, FMEA, gaussian, Normal distribution, pFMEA, process control, Quality, SPC