Nov 18 2016

The Art of the Question | Robert W. “Doc” Hall | Compression Institute

“Twenty-five years ago, I tried to coach adult college students to seek and solve problems using the classic Deming PDCA Circle. In classrooms, students were unused to identifying their own problems rather than having them pre-defined. The first time through this exercise, over half did not reflect on a problem to seek root cause. Instead, they went shopping for a gizmo, a program, or a recipe to fix the problem – a quick-fix mentality.”

Sourced through the Compression Institute

Michel Baudin’s comments: 33 years ago, Robert W. Hall wrote Zero Inventories, the first original, technically meaty book in English about Lean Manufacturing, and I have had great respect for him ever since.

Nov 7 2018

Where Problem-Solving Goes Wrong | Gregg Stocker | Lessons in Lean

“[…] Whenever someone asks for input on a problem-solving A3, I tend to look for the red flags or areas in each section where help is most commonly needed. The key is to help people understand that the process is about investigating, reflecting, and learning, not filling in the form. It is far too common, especially early in a person’s development, to force-fit information into the boxes just to appease someone else and show that the process was followed. […]”

Sourced through Lessons in Lean

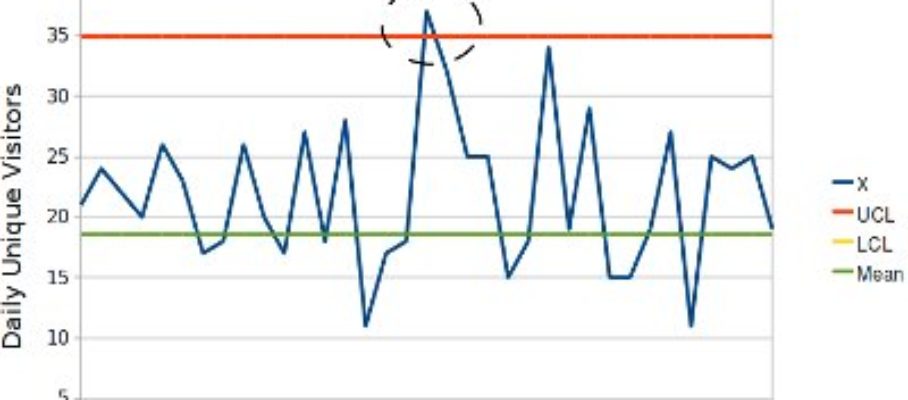

Michel Baudin’s comments: Companies use forms to make teams answer every question. Filling out forms, however, often degenerates into the formalism Gregg describes. Instead of reviewing content, managers just check that the team has entered something in every box.

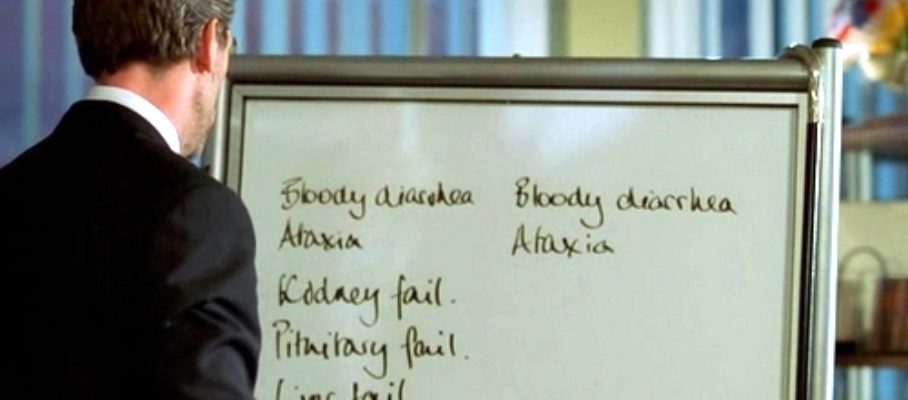

Gregg also says nothing about immediate countermeasures to “stop the bleeding.” Assume, for example, that customers start returning defectives. The first step is to prevent more defectives from escaping. Meanwhile, you investigate root causes, implement permanent solutions, and validate them. The point of the process is to go beyond immediate countermeasures: once the permanent solutions are validated, the countermeasures are no longer useful and can be dismantled.

#problemsolving, #A3


By Michel Baudin • Blog clippings • Tags: A3, problem-solving